The rise of large language models (LLMs) like ChatGPT and Claude has brought a new challenge: how to manage and deploy these complex systems in real-world settings. That’s where LLMOps comes in.

LLMOps, which stands for Large Language Model Operations, includes the tools, practices, and workflows needed to develop, fine-tune, monitor, and maintain LLMs. As LLMs become more common in business, product development, and research, LLMOps helps teams keep everything running smoothly.

Let’s learn about LLMOps with this quick guide from WeCloudData, your go‑to academy for AI, ML, and data science training.

What is LLMOps?

Let’s start with the crux of the issue: what is LLMOps? It is a specialized version of MLOps, focused specifically on LLMs. While MLOps helps teams manage machine learning models in production, LLMOps does the same for LLMs, which are much larger and more complex.

To understand this better, let’s quickly define the two main terms used in this guide:

- LLMs (Large Language Models) are deep learning models trained on massive amounts of text. They can generate and understand human-like language. These models have billions of parameters and are capable of tasks like answering questions, summarizing documents, and generating code.

- MLOps (Machine Learning Operations) is a set of practices used to manage the lifecycle of machine learning models, from training and testing to deployment and monitoring.

LLMOps is built on MLOps, but with additional tools to handle the unique needs of large language models.

Breaking Down the Term: LLMOps = LLM + Ops

- LLM (Large Language Model): A type of AI model trained on a vast amount of text. LLMs can generate text, answer questions, summarize documents, and more.

- Ops (Operations): The process of managing something in production. In this case, it’s about ensuring LLMs work well in real-world systems, reliably and securely.

Why Is LLMOps Needed?

LLMs differ from traditional machine learning models. They are larger, more complex, and behave differently depending on how they are prompted and used. These differences mean that the typical tools for machine learning projects are no longer sufficient.

For example:

- LLMs require large amounts of computing power and memory.

- They can be fine-tuned or updated with new data or prompts.

- Their outputs can be unpredictable or biased.

- Their performance is hard to measure using standard accuracy metrics.

Because of this, organizations need a structured way to manage them, from building to deployment. That’s what LLMOps is designed for.

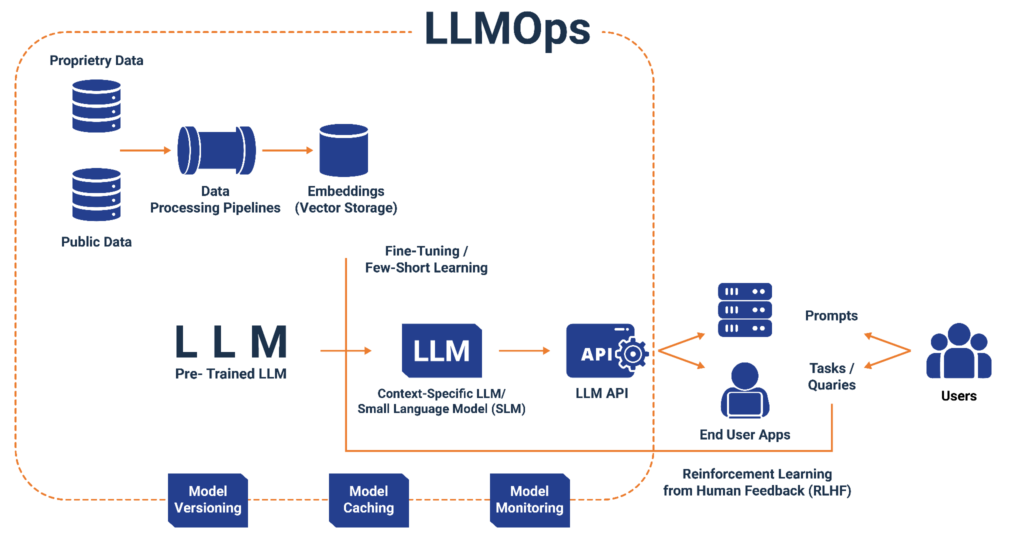

What Steps are Involved in LLMOps?

While LLMOps shares some similarities with MLOps, the process of building applications with large language models is different in key ways. The main difference is the use of foundation models. Instead of training models from scratch, LLMOps focuses on customizing and adapting these pre-trained LLMs for specific tasks or applications.

LLMOps involves a number of different steps, including:

1. Data Management

LLMs rely on data. LLMOps covers collecting, cleaning, storing, and versioning datasets. It ensures the right data is used during training or fine-tuning and tracks changes over time.
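One common way to track dataset changes is to derive a version identifier from the content itself. The following is a minimal sketch, assuming the records are small JSON-serializable dictionaries; the function name `dataset_fingerprint` and the sample records are illustrative, not part of any specific LLMOps tool.

```python
import hashlib
import json


def dataset_fingerprint(records):
    """Return a short, deterministic hash for a list of JSON-serializable records."""
    digest = hashlib.sha256()
    for record in records:
        # sort_keys makes the hash independent of dictionary key order
        line = json.dumps(record, sort_keys=True, ensure_ascii=False)
        digest.update(line.encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()[:12]


# Hypothetical fine-tuning records; v2 adds one example to v1
v1 = [{"prompt": "Summarize this ticket.", "completion": "Customer cannot log in."}]
v2 = v1 + [{"prompt": "Summarize this email.", "completion": "Invoice is overdue."}]

fp_v1 = dataset_fingerprint(v1)
fp_v2 = dataset_fingerprint(v2)
print(fp_v1, fp_v2)  # two different 12-character identifiers
```

Storing the fingerprint alongside each fine-tuning run makes it possible to tell later which exact data a model was adapted on.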

2. Prompt Engineering

Many LLMs are used with prompts that guide the model. LLMOps tools help teams test, track, and version these prompts.
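Prompt versioning can be sketched with a small in-memory registry. This is an illustration only: the `PromptRegistry` class and its methods are hypothetical, and a real setup would likely persist versions in a database or a dedicated tool.

```python
class PromptRegistry:
    """In-memory store that keeps every version of each named prompt template."""

    def __init__(self):
        self._versions = {}

    def register(self, name, template):
        """Store a template and return its 1-based version number."""
        history = self._versions.setdefault(name, [])
        # Do not create a new version if nothing changed
        if history and history[-1] == template:
            return len(history)
        history.append(template)
        return len(history)

    def get(self, name, version=None):
        """Return a specific version, or the latest one when version is None."""
        history = self._versions[name]
        return history[-1] if version is None else history[version - 1]

    def render(self, name, version=None, **variables):
        """Fill the template placeholders with the given variables."""
        return self.get(name, version).format(**variables)


registry = PromptRegistry()
registry.register("summarize", "Summarize the text below.\n\n{text}")
registry.register("summarize", "Summarize the text below in {n} bullet points.\n\n{text}")

# Older versions stay retrievable, so results can be compared across versions
print(registry.render("summarize", version=1, text="LLMOps manages LLMs in production."))
```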

3. Fine-Tuning and Adaptation

Instead of training models from scratch, companies typically adapt pre-trained models to meet their needs. This can be done through fine-tuning or techniques like LoRA, QLoRA, or retrieval-augmented generation (RAG). LLMOps simplifies managing these adaptations.
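To give a sense of why LoRA is attractive, the short calculation below compares the parameters of one full weight matrix with the two low-rank matrices LoRA trains in its place. The layer size and rank are illustrative assumptions, not figures for any particular model.

```python
def lora_trainable_params(d_out, d_in, rank):
    """Parameters in the two low-rank matrices B (d_out x rank) and A (rank x d_in)."""
    return rank * (d_out + d_in)


# Illustrative sizes, not tied to a specific model
d_out, d_in, rank = 4096, 4096, 8

full = d_out * d_in  # parameters updated by full fine-tuning of this matrix
lora = lora_trainable_params(d_out, d_in, rank)

print(full, lora, f"{lora / full:.2%}")  # 16777216 65536 0.39%
```

Under these assumptions, the adapter trains well under 1% of the parameters of the matrix it modifies, which is one reason adapted models are cheaper to produce and store than fully fine-tuned copies.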

4. Evaluation and Testing

Unlike traditional models, LLMs generate text, which is challenging to measure. LLMOps includes special tools to evaluate output quality using metrics like BLEU, ROUGE, or custom scoring systems.
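As a rough illustration of how an overlap metric works, here is a simplified ROUGE-1 style score based on shared unigrams. It is a sketch under simplifying assumptions (lowercasing and whitespace tokenization, no stemming); production evaluations normally rely on an established metrics library.

```python
from collections import Counter


def rouge1(candidate, reference):
    """Simplified ROUGE-1: unigram overlap between candidate and reference text."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    # Counter intersection keeps the minimum count of each shared token
    overlap = sum((cand & ref).values())
    precision = overlap / max(sum(cand.values()), 1)
    recall = overlap / max(sum(ref.values()), 1)
    f1 = 0.0 if overlap == 0 else 2 * precision * recall / (precision + recall)
    return {"precision": precision, "recall": recall, "f1": f1}


scores = rouge1(
    "the model answers questions",
    "the model answers user questions well",
)
print(scores)
```

Here every candidate word appears in the reference (precision 1.0), but only four of the six reference words are covered (recall about 0.67). Overlap scores like this say nothing about factual correctness, which is why teams often combine them with custom scoring or human review.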

5. Deployment

Once the model is prepared, it must be deployed via an app or API. LLMOps ensures this occurs securely, quickly, and cost-effectively.
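One simple cost-saving idea at deployment time is to cache responses for repeated prompts. The sketch below assumes deterministic outputs are acceptable for the use case; `CachedModelClient` and `fake_model` are hypothetical stand-ins, and no real model API is called.

```python
import hashlib


class CachedModelClient:
    """Wraps a model-calling function and reuses responses for identical prompts."""

    def __init__(self, call_model):
        self._call_model = call_model  # any function that maps prompt -> response
        self._cache = {}
        self.hits = 0
        self.misses = 0

    def complete(self, prompt):
        key = hashlib.sha256(prompt.encode("utf-8")).hexdigest()
        if key in self._cache:
            self.hits += 1
            return self._cache[key]
        self.misses += 1
        response = self._call_model(prompt)
        self._cache[key] = response
        return response


def fake_model(prompt):
    """Stand-in for a real model endpoint (assumption for this example)."""
    return f"echo: {prompt}"


client = CachedModelClient(fake_model)
client.complete("What is LLMOps?")
client.complete("What is LLMOps?")  # served from the cache
print(client.hits, client.misses)  # 1 1
```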

6. Monitoring

After deployment, it’s crucial to monitor how the model performs. LLMOps tracks metrics like latency, token usage, unusual outputs, and model drift.
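A minimal sketch of tracking latency and token usage might look like the following. The `LLMMonitor` class and the sample numbers are invented for illustration; real deployments usually send these measurements to a metrics or observability system.

```python
import math


class LLMMonitor:
    """Collects per-request latency and token counts for simple reporting."""

    def __init__(self):
        self.latencies_ms = []
        self.total_tokens = 0

    def record(self, latency_ms, prompt_tokens, completion_tokens):
        self.latencies_ms.append(latency_ms)
        self.total_tokens += prompt_tokens + completion_tokens

    def percentile(self, p):
        """Nearest-rank percentile of recorded latencies, with p between 0 and 100."""
        if not self.latencies_ms:
            raise ValueError("no requests recorded")
        ordered = sorted(self.latencies_ms)
        rank = max(1, math.ceil(p / 100 * len(ordered)))
        return ordered[rank - 1]


monitor = LLMMonitor()
# (latency in ms, prompt tokens, completion tokens) for four hypothetical requests
for latency, p_tok, c_tok in [(120, 50, 30), (95, 40, 20), (480, 200, 150), (110, 60, 25)]:
    monitor.record(latency, p_tok, c_tok)

print(monitor.percentile(50), monitor.percentile(95), monitor.total_tokens)  # 110 480 575
```

Looking at a high percentile rather than the average helps surface slow outliers, such as the 480 ms request above.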

7. Security and Governance

LLMOps helps teams set up access controls, audit logs, and privacy protections, especially for sensitive business data.
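The three controls mentioned here can be sketched together in a few lines. This example is a simplified assumption: the role names are hypothetical, the audit log is an in-memory list, and the email pattern is deliberately basic rather than a complete privacy filter.

```python
import re
from datetime import datetime, timezone

EMAIL_PATTERN = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
ALLOWED_ROLES = {"analyst", "admin"}  # hypothetical role names

audit_log = []


def redact(text):
    """Replace email addresses with a placeholder before text is stored or sent."""
    return EMAIL_PATTERN.sub("[REDACTED_EMAIL]", text)


def authorize_and_log(user, role, prompt):
    """Check the role, write an audit entry, and return the redacted prompt."""
    allowed = role in ALLOWED_ROLES
    audit_log.append({
        "time": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "role": role,
        "allowed": allowed,
        "prompt": redact(prompt),  # never store the raw prompt
    })
    if not allowed:
        raise PermissionError(f"role {role!r} may not query the model")
    return redact(prompt)


safe_prompt = authorize_and_log("alice", "analyst", "Draft a reply to jane.doe@example.com today")
print(safe_prompt)  # Draft a reply to [REDACTED_EMAIL] today
```

Denied requests are logged as well, so the audit trail records who attempted access and not only who succeeded.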

LLMOps vs MLOps: What’s the Difference?

LLMOps is a specialized subset of MLOps (machine learning operations), which focuses specifically on the challenges and requirements of managing LLMs. While MLOps covers the general principles and practices of managing machine learning models, LLMOps addresses the unique characteristics of LLMs, such as their large size, complex training requirements, and high computational demands.

| Category | MLOps | LLMOps |
|---|---|---|
| Model type | Small to medium-sized models | Massive language models |
| Training | Often trained from scratch | Typically fine-tuned |
| Output | Numbers or classifications | Natural language text |
| Evaluation | Accuracy, precision | BLEU, ROUGE, human feedback |
| Infrastructure | Moderate | GPU-heavy, large-scale |
| Challenges | Versioning, scaling | Prompt tracking, hallucinations, latency |

Why LLMOps Is Becoming Essential

Several trends are pushing companies to adopt LLMOps.

- LLM adoption is growing fast. More businesses want to use models like GPT-4, Claude, or Mistral in their workflows.

- Custom applications need fine-tuning. One-size-fits-all LLMs don’t always work. Companies want to personalize them.

- Costs need to be controlled. Running LLMs is expensive. LLMOps helps reduce waste and improve performance.

- Governance is critical. Organizations need to ensure ethical use, compliance, and model security.

According to Google Cloud, LLMOps brings order to the complexity of working with large models at scale.

What Tools and Platforms Support LLMOps?

There are many platforms now offering LLMOps support:

- Databricks: Helps manage fine-tuning, evaluation, and deployment on a shared platform.

- Google Cloud Vertex AI: Offers LLM tuning, pipelines, and monitoring tools.

- IBM watsonx.ai: Focuses on foundation models and prompt engineering.

- Weights & Biases (W&B): Great for prompt testing, LLM chaining, and model tracking.

- Domino Data Lab: Adds observability and reproducibility to LLM workflows.

These platforms help teams scale up LLM use without chaos.

Learn with WeCloudData

This concludes our quick guide to LLMOps. If this interests you, join WeCloudData, North America today. WeCloudData offers hands-on training to help professionals build real-world AI and data skills, including LLM workflows, prompt engineering, and model deployment.

If LLMOps interests you, check out our:

- LLM Bootcamp

- AI/ML Bootcamp

- MLOps Engineering Track

- Python for Data Science Course

- Projects & Mentorship Program

Explore all our offerings at weclouddata.com.

Our instructors are industry experts, and our programs focus on skills that companies actually look for. Whether you’re starting out or upskilling for a job, our training can help you get there faster.