The Operating LLMs in Production course provides a comprehensive understanding of deploying and managing LLMs in production environments. It is designed for NLP engineers, LLM engineers, and MLOps professionals seeking to master LLM operations. Participants will gain hands-on experience in setting up cloud-based AI infrastructure, using GPU clusters, applying distributed training and inference techniques, and optimizing and scaling LLMs for real-world applications.

Contact us to discuss schedules, formats, and custom programs for your team.

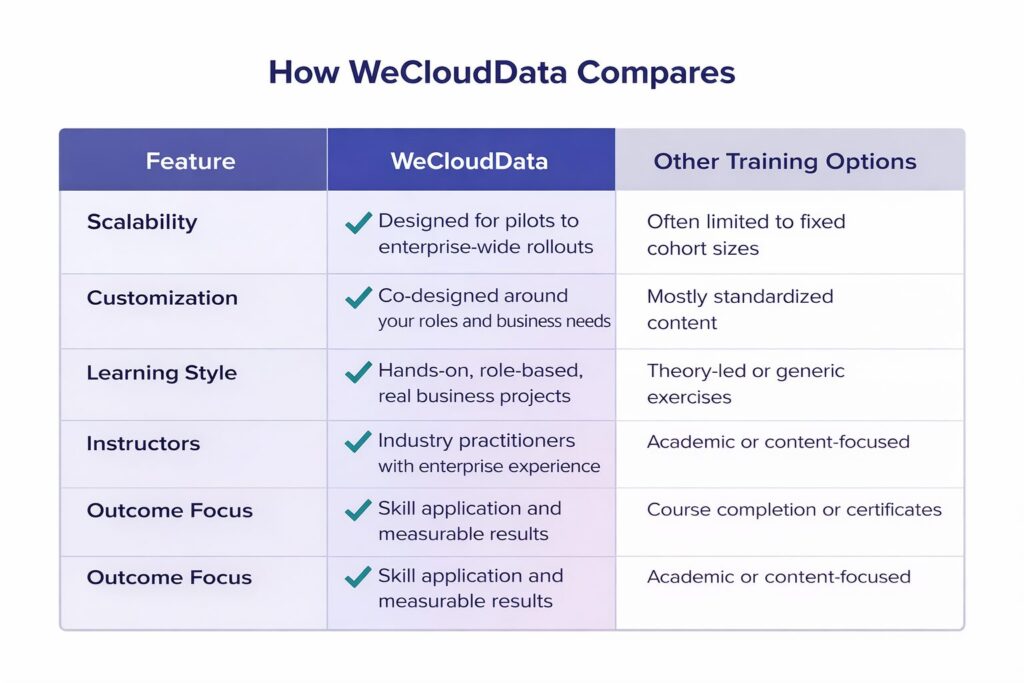

Built for real enterprise training.

WeCloudData programs are designed to scale with your organization, adapt to your business needs, and deliver practical, role-based skills that teams can apply immediately.