Fast deployment, scalable systems, and reproducibility are more important than ever in the fast-paced world of software, data science, machine learning (ML), and DevOps. This is where Docker shines. It helps developers build, share, run, and verify applications anywhere, without tedious environment configuration or management.

This blog post gives you a beginner-friendly intro to Docker, including the fundamentals of containers, Docker architecture, its role in cloud computing, and how it’s shaping workflows in AI, ML, and other fields. Let’s discover the world of Docker with WeCloudData!

What Is Docker?

Docker is an open-source platform that enables developers to build, deploy, run, update, and manage applications in any environment using containers.

Containers are lightweight, standalone executable units that include everything an application needs to run: code, libraries, dependencies, and environment settings. Unlike traditional virtual machines (VMs), containers don’t each need a full guest operating system. Instead, they share the host system’s kernel, which makes them faster to start, more portable, and more resource-efficient.
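One way to see the shared kernel for yourself is to compare the kernel release reported on the host with the one reported inside a container. The snippet below is a minimal sketch that uses only Python’s standard library. On a Linux host, running it directly and then inside a container should print the same release, whereas a VM would report its own guest kernel. On macOS or Windows, Docker Desktop runs containers inside a Linux VM, so the value would match that VM rather than your desktop OS.

```python
import platform


def kernel_release() -> str:
    """Return the release string of the kernel this process is running on."""
    return platform.release()


# Run this once on the host and once inside a container, then compare.
print(f"Kernel release: {kernel_release()}")
```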

How Docker Powers AI, ML, Data Science, and DevOps

Imagine you are a data scientist developing a model. On your machine, the code works flawlessly, but in production it fails. Why? The two environments differ. Docker addresses this by packaging your entire application stack so that it runs the same way everywhere. In AI/ML workflows, that means a model trained in a Jupyter notebook with particular Python library versions behaves the same on a cloud server.

For DevOps teams, Docker enables standardized pipelines for integration, testing, and deployment. Key benefits of Docker include:

- Reproducibility in data experiments.

- Simplified model deployment.

- Consistency across dev, test, and production.

- Faster, isolated training environments.

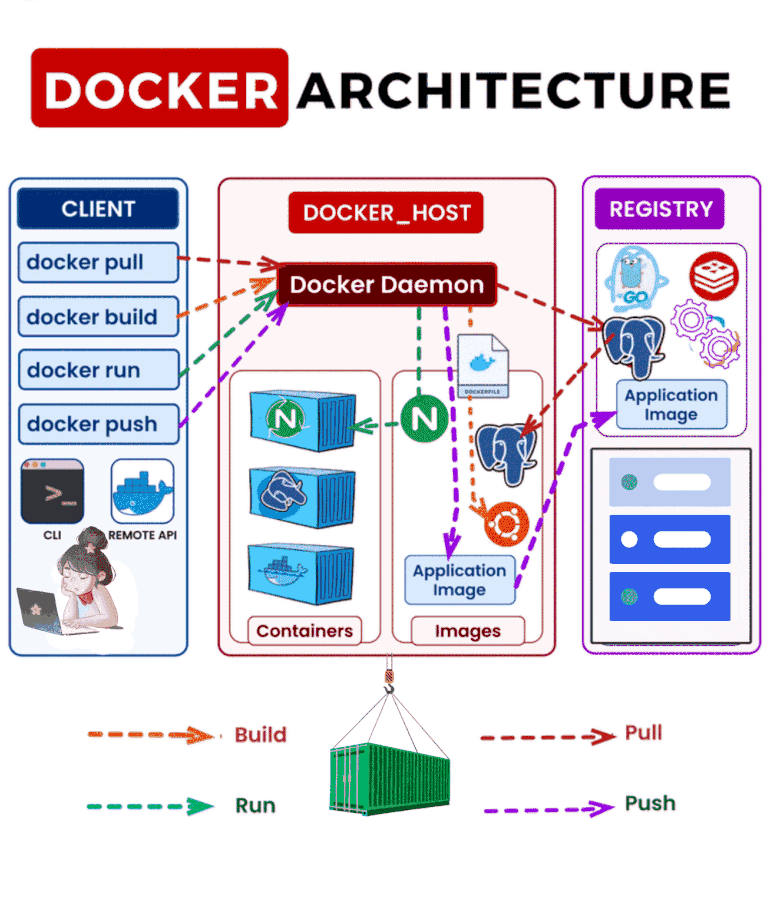

How Docker Works: Docker Architecture

Docker acts like an operating system for containers. Just as a virtual machine virtualizes server hardware so that you no longer manage it directly, a container virtualizes the server’s operating system. Docker is installed on each server and provides simple commands for building, starting, and stopping containers.

Docker containers can be easily run and managed at scale with AWS Fargate, Amazon ECS, Amazon EKS, and AWS Batch.

Docker Architecture Components

Let’s explore the core Docker architecture and its components.

1. Docker Engine

Docker Engine is the runtime that runs and manages containers. It consists of:

- Docker Daemon (dockerd): The Docker daemon (dockerd) listens for Docker API requests and manages Docker objects such as images, containers, networks, and volumes.

- Docker Client (docker): The command-line interface (CLI) and the primary way most users interact with Docker. When you run a command such as docker run, the client sends it to dockerd, which carries it out. The docker command uses the Docker API, and a single client can communicate with more than one daemon.

- REST API: The interface that programs, including the Docker client, use to communicate with the daemon.
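To make the client/daemon split concrete, here is a minimal sketch of talking to the daemon’s REST API directly, without the docker command. It assumes a Linux host where dockerd listens on the default Unix socket at /var/run/docker.sock and that your user may read it. The helper names build_request and list_containers are made up for this illustration. GET /containers/json is the Engine API endpoint for listing running containers, roughly what docker ps shows.

```python
import json
import socket

# Default socket location on Linux; an assumption, adjust for your setup.
DOCKER_SOCKET = "/var/run/docker.sock"


def build_request(path: str) -> bytes:
    """Build a minimal HTTP/1.0 GET request for the Docker Engine API.

    HTTP/1.0 is used so the daemon closes the connection after replying,
    which keeps the reading loop below simple.
    """
    return (f"GET {path} HTTP/1.0\r\nHost: localhost\r\n\r\n").encode("ascii")


def list_containers(socket_path: str = DOCKER_SOCKET):
    """Ask the daemon for running containers and return the parsed JSON body."""
    with socket.socket(socket.AF_UNIX, socket.SOCK_STREAM) as sock:
        sock.connect(socket_path)
        sock.sendall(build_request("/containers/json"))
        chunks = []
        while True:
            chunk = sock.recv(4096)
            if not chunk:  # daemon closed the connection: response complete
                break
            chunks.append(chunk)
    # Split the HTTP headers from the JSON body.
    _headers, _, body = b"".join(chunks).partition(b"\r\n\r\n")
    return json.loads(body)


# Show the raw request the sketch would send.
print(build_request("/containers/json").decode("ascii"))
```

Calling list_containers() on a machine with a running daemon should return one dictionary per running container. In practice you would normally use the docker CLI or an official SDK rather than raw sockets; the point here is only that every client speaks the same API.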

2. Images

An image is a read-only template with instructions for creating a Docker container. A typical example is a Python environment with TensorFlow already installed. Often, an image is based on another image, with some additional customization. For example, you may build an image that is based on the Ubuntu image but also installs the Apache web server and your application, along with the configuration needed to make your application run.
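The Ubuntu-plus-Apache example can be sketched as a Dockerfile, the text file Docker reads to build an image. The snippet below holds one as a Python string and lists its instructions in order, to show that the image is a base image plus a series of customization steps. The ./site/ path, the ubuntu:24.04 tag, and the instructions helper are illustrative assumptions, not a recommended production setup.

```python
# A hypothetical Dockerfile: Ubuntu base image + Apache + site files.
DOCKERFILE = """\
# Start from an existing base image
FROM ubuntu:24.04
RUN apt-get update && apt-get install -y apache2
COPY ./site/ /var/www/html/
EXPOSE 80
CMD ["apache2ctl", "-D", "FOREGROUND"]
"""


def instructions(dockerfile: str) -> list[tuple[str, str]]:
    """Return (keyword, arguments) pairs, skipping blank lines and comments."""
    steps = []
    for line in dockerfile.splitlines():
        line = line.strip()
        if not line or line.startswith("#"):
            continue
        keyword, _, arguments = line.partition(" ")
        steps.append((keyword, arguments))
    return steps


for keyword, arguments in instructions(DOCKERFILE):
    print(f"{keyword:<7}{arguments}")
```

Saved as a file named Dockerfile next to a site directory, this text could be built with docker build.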

3. Containers

A container is a runnable instance of an image. You can create, start, stop, move, or delete a container using the Docker API or CLI. Although containers are isolated from one another and from the host, they all share the host kernel.
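The container lifecycle maps onto a handful of CLI commands. The sketch below builds them as argument lists and only executes them if you call run_all on a machine where docker is installed. The image nginx:alpine and the container name demo-web are placeholders chosen for illustration.

```python
import shutil
import subprocess


def lifecycle_commands(image: str, name: str) -> list[list[str]]:
    """Return the docker CLI calls for create -> start -> stop -> delete."""
    return [
        ["docker", "create", "--name", name, image],  # create from an image
        ["docker", "start", name],                    # start the container
        ["docker", "stop", name],                     # stop it again
        ["docker", "rm", name],                       # delete the stopped container
    ]


def run_all(commands: list[list[str]]) -> None:
    """Run each command in order, stopping at the first failure."""
    if shutil.which("docker") is None:
        raise RuntimeError("docker CLI not found on PATH")
    for command in commands:
        subprocess.run(command, check=True)


# Print the commands instead of running them, so this is safe anywhere.
for command in lifecycle_commands("nginx:alpine", "demo-web"):
    print(" ".join(command))
```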

4. Docker Hub

Docker Hub is a public cloud registry for finding, sharing, and storing images.

5. Docker Compose

Docker Compose is a tool for defining and running multi-container apps from a single configuration file. It is well suited to end-to-end machine learning pipelines.
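As a rough illustration of what that single configuration file looks like, the sketch below writes a small docker-compose.yml describing two services: a hypothetical api service built from the current directory and a PostgreSQL database. The service names, ports, and password are placeholders. Note that depends_on only controls start order; it does not wait for the database to be ready.

```python
import tempfile
from pathlib import Path

# A hypothetical two-service Compose file.
COMPOSE_YAML = """\
services:
  api:
    build: .
    ports:
      - "8000:8000"
    depends_on:
      - db
  db:
    image: postgres:16
    environment:
      POSTGRES_PASSWORD: example
"""


def write_compose_file(directory: str) -> Path:
    """Write the Compose configuration into `directory` and return its path."""
    path = Path(directory) / "docker-compose.yml"
    path.write_text(COMPOSE_YAML, encoding="utf-8")
    return path


# Demo: write into a throwaway directory and show the result.
with tempfile.TemporaryDirectory() as project_dir:
    compose_path = write_compose_file(project_dir)
    print(compose_path.read_text(encoding="utf-8"))
```

In a real project you would keep this file next to your code and start both services together with docker compose up.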

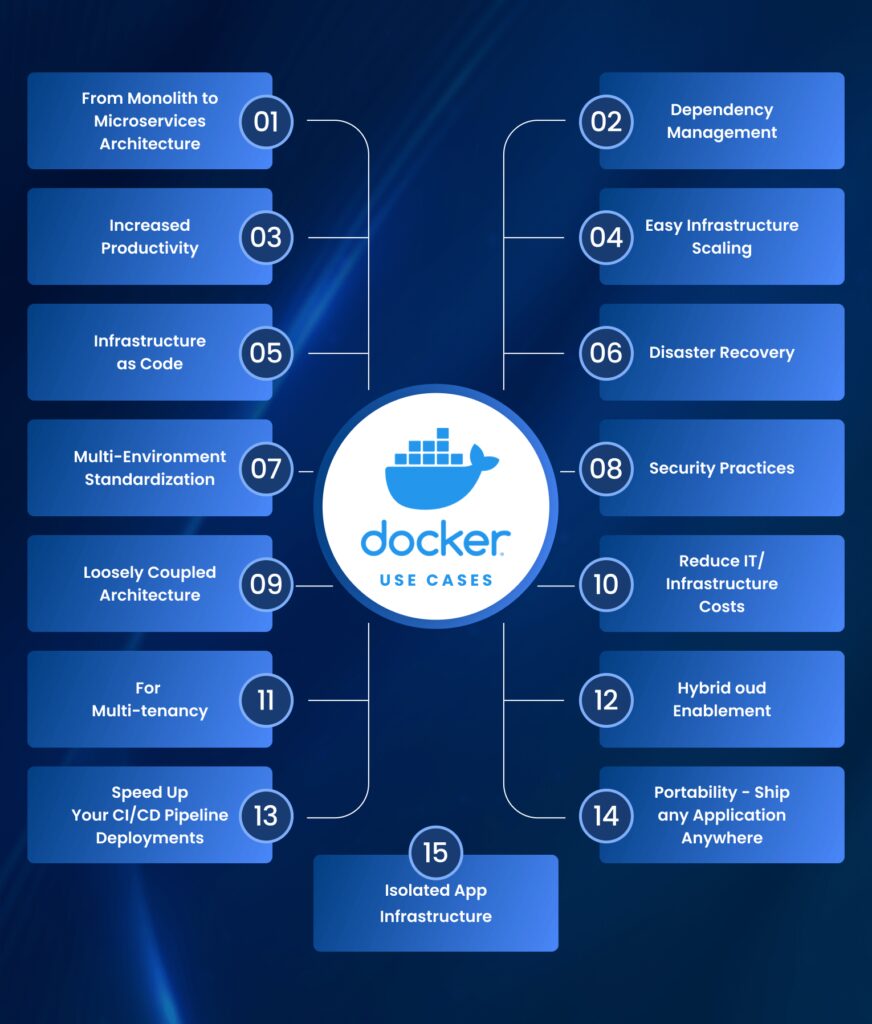

When to Use Docker

You can use Docker containers as a core building block for creating modern applications and platforms.

AI/ML and Data Science

Docker is a game-changer for AI, machine learning, and data science workflows:

Make reproducible environments: Docker lets you bundle all of your dependencies in a container, including R, Python, Conda environments, Jupyter notebooks, and more. This addresses the “it worked on my laptop” problem by making your code run the same way on any computer.
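Reproducibility depends on pinning exact versions rather than installing whatever is newest. The sketch below renders a tiny Dockerfile from a dictionary of pinned packages. The render_dockerfile helper and the specific versions are illustrative assumptions; substitute the versions your project was actually tested with.

```python
def render_dockerfile(python_version: str, pins: dict[str, str]) -> str:
    """Render a Dockerfile that installs exactly the pinned package versions."""
    # Sort so the output is stable regardless of dictionary order.
    packages = " ".join(
        f"{name}=={version}" for name, version in sorted(pins.items())
    )
    return (
        f"FROM python:{python_version}-slim\n"
        f"RUN pip install --no-cache-dir {packages}\n"
    )


print(render_dockerfile("3.11", {"pandas": "2.2.2", "numpy": "1.26.4"}))
```

Anyone who builds an image from the resulting file gets the same Python version and library versions, which is what makes an experiment repeatable on another machine.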

Package and share machine learning models: Using frameworks like Flask or FastAPI, you can wrap a trained model in a web service, containerize it, and deploy it to cloud or edge environments.
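Here is a minimal sketch of the kind of prediction service you might put in a container. To stay dependency-free it uses Python’s built-in http.server instead of Flask or FastAPI, and the “model” is just a hard-coded linear formula standing in for a real trained model. The weights, the port, and the JSON field names are all assumptions made for illustration.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer

# Stand-in for a trained model: hypothetical weights and bias.
WEIGHTS = [0.5, -0.25]
BIAS = 1.0


def predict(features: list[float]) -> float:
    """Compute a linear prediction: dot(WEIGHTS, features) + BIAS."""
    return sum(w * x for w, x in zip(WEIGHTS, features)) + BIAS


class PredictHandler(BaseHTTPRequestHandler):
    def do_POST(self):
        # Expect a JSON body such as {"features": [2.0, 4.0]}.
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length))
        body = json.dumps({"prediction": predict(payload["features"])}).encode()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(port: int = 8000) -> HTTPServer:
    """Create the server; bind to 0.0.0.0 so a published container port can reach it."""
    return HTTPServer(("0.0.0.0", port), PredictHandler)


print(predict([2.0, 4.0]))
```

Inside a container, the start command would call make_server().serve_forever(), and you would publish the port when starting the container, for example with docker run -p 8000:8000.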

Build full ML pipelines: Create complete machine learning pipelines by running each step in its own container, including data ingestion, preprocessing, model training, and inference. This modularity makes scaling, testing, and development much easier.
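One simple way to picture such a pipeline is as an ordered list of containers that hand data to each other through a shared directory. The sketch below builds one docker run command per stage. The image names (ml-pipeline/ingest and so on) and the host path /srv/pipeline-data are hypothetical, and real pipelines often use an orchestrator instead of a plain loop.

```python
import subprocess

# Pipeline stages in execution order, taken from the steps named above.
STAGES = ["ingest", "preprocess", "train", "infer"]


def stage_command(stage: str, data_dir: str = "/srv/pipeline-data") -> list[str]:
    """Build the docker run call for one stage, mounting the shared data directory."""
    return [
        "docker", "run", "--rm",          # remove the container when it exits
        "--volume", f"{data_dir}:/data",  # every stage reads and writes /data
        f"ml-pipeline/{stage}:latest",    # hypothetical per-stage image
    ]


def run_pipeline() -> None:
    """Run the stages one after another; a failing stage stops the pipeline."""
    for stage in STAGES:
        subprocess.run(stage_command(stage), check=True)


# Print the commands rather than running them, so this is safe anywhere.
for stage in STAGES:
    print(" ".join(stage_command(stage)))
```

Because each stage is its own image, you can rebuild, test, or scale one step without touching the others.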

Web Development and Collaboration

For web developers, Docker removes environment mismatch headaches:

Standardize development setups: To avoid errors caused by different OS or dependency versions, every team member can run the same environment (such as Node.js, PostgreSQL, and Redis) in containers.

Share setups easily: You only need to share a Dockerfile or docker-compose.yml, and colleagues can start your project right away with no manual setup.

Next Steps: Learn Docker with WeCloudData

Docker has become an essential tool for modern developers, data scientists, and DevOps professionals alike. Whether you’re building AI models, managing data pipelines, or deploying scalable applications, Docker simplifies the process and helps you work more efficiently.

If you’re ready to take the next step in your containerization journey after reading this intro to Docker, WeCloudData offers a hands-on, beginner-friendly course designed to get you up and running with Docker fast. Learn how to build, run, and deploy containers in real-world scenarios, guided by industry experts.

Enroll in our Introduction to Docker course and start mastering Docker today! Or, if you are an organization looking to upskill your employees, we recommend exploring our corporate AI upskilling program.