The blog is posted by WeCloudData’s student Amany Abdelhalim.

There are two steps that I followed to create this pipeline :

1) Collect Twitter Feeds and Ingest into DynamoDB

2) Copy the Twitter Data from DynamoDB to Hive

First: Collect Twitter Feeds and Ingest into DynamoDB

In order to create a pipeline where I collect tweets on a specific topic and write them in a DynamoDB Table.

I launched an EC2 instance and installed the following:

· Python3

· Python packages (tweepy, boto, awscli, extblob)

I created a table in DynamoDB that I will use to write the tweets to.

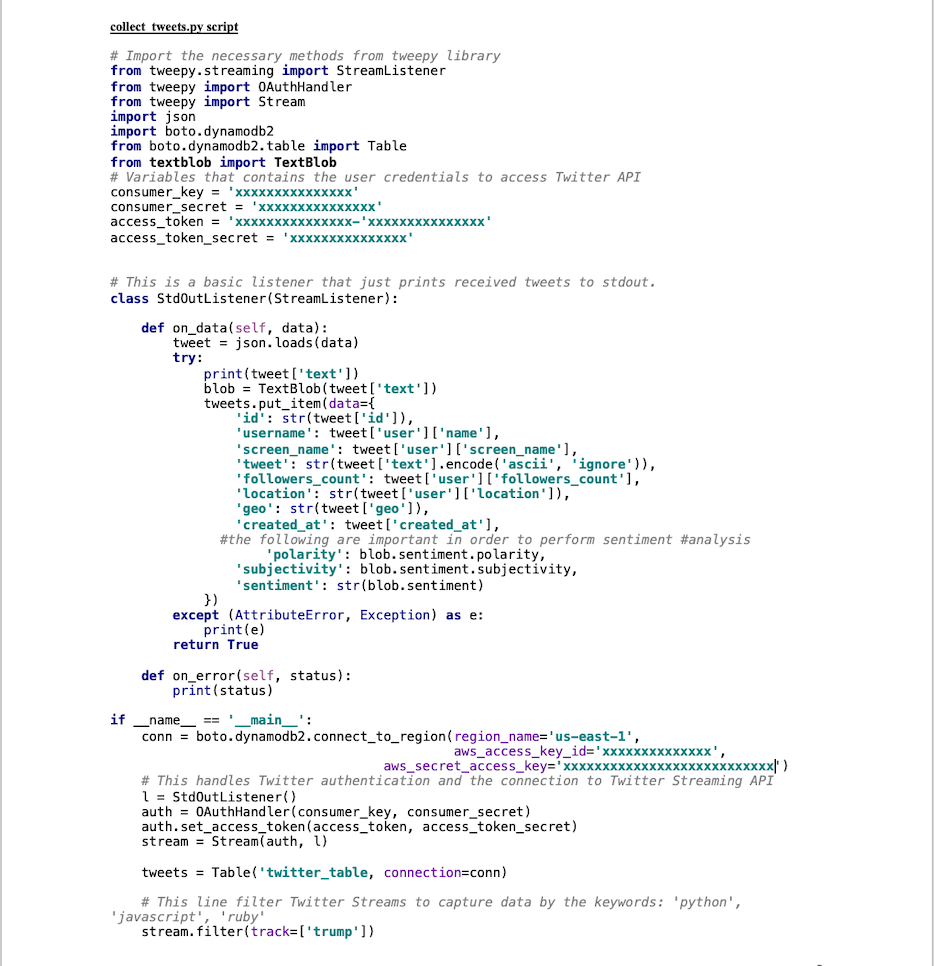

I prepared a script “collect_tweets.py “ that will collect tweets related to the “trump” topic and write them to a DynamoDB table. In the script I am extracting 11 fields from each tweet which are the id, user name, screen name, tweet text, followers , geo, created at, the sentiment of each tweet, polarity and subjectivity.

I copied the collect_tweets.py script from my local machine to the EC2 instance.

scp -i ~/privateKeyFile.pem collect_tweets.py ec2-user@ec2–xx–xxx–xxx–xxx.compute-1.amazonaws.com:/home/ec2-user

I ran the script on EC2 using nohup to ensure that the script runs in the background even if after disconnecting the ssh session.

nohup python3 collect_tweets.py 2>&1

I used tail to check if the script is working.

tail nohup.out

I checked twitter_table in DynamoDB and it has 2816 tweet records written to it before I stopped running the script on the EC2.

Second: Copying the twitter data from DynamoDb to hive

I Launched an EMR cluster, with the following tools Hadoop, Hive, Presto, HBase.

I connected to Hue and created 2 external tables and copied the data from the “twitter_table” from DynamoDB to the hive table“twitter_hive”.

The following is the “twitter_hive” table:

The following is the “twitter_ddb” table that was used to copy the data from the “twitter_table” from DynamoDB to the hive table “twitter_hive”.

I copied the data from the “twitter_ddb” table to the “twitter_hive” table.

I tested that the data was copied successfully to the hive table by performing some queries.

In the following query, I am selecting the first 10 records of the twitter_hive table.

Query:

select * from twitter_hive limit 10;

Output:

In the following query, I am selecting the sentiment, polarity and subjectivity form the first 10 records of the twitter_hive table.

Query:

Output:

In the following query, I am calculating the top 10 most popular hashtags in the twitter dataset

Query:

Output:

In the following query, I am checking which locations have the most number of tweets about “trump”.

Query:

Output:

In the following query, I am checking which locations have the most negative sentiment about trump.

Query:

Output:

In the following query, I am doing a word count to find a popular keyword in the dataset, and then calculating the average polarity of all the tweets that contain that keyword.

First, I did a word count to find a popular keyword in the dataset.

Query:

Output:

Second, I chose the word “Trump” that occurred 1124 times to calculate the average polarity of all the tweets that contain “Trump”.

Query:

Output:

Note: the tweets were used as is, where actually optimally they shouldn’t. Data should be cleaned before being used for analysis. One form of cleaning could be removing the stop words, another form of cleaning could be removing the mentions, ..etc.