by Student WeCloudData

May 20, 2020

The blog is posted by WeCloudData’s Big Data course student Udayan Maurya.

Spark’s native ML library, though powerful, generally lacks features. Python’s libraries usually provide more options to tinker with model parameters, resulting in better-tuned models. However, Python is bound to a single machine and one contiguous block of memory, which makes it infeasible for training on large-scale distributed data sets.

Pandas UDF (get the best of both worlds)

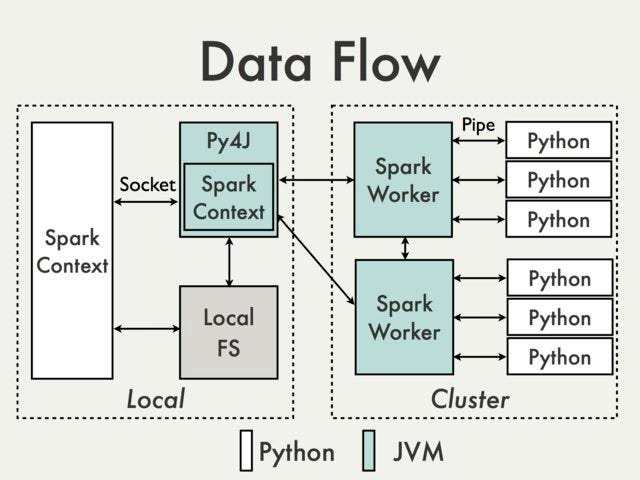

PySpark performs computation through the following data flow:

We trigger our Spark-context program on the master node (“Local” in the image here). The Spark program is executed on JVMs. Whenever Spark encounters Python functions during execution, the JVMs start Python processes on the worker nodes and Python takes on the assigned task. The data required for the computation is pickled to Python, and the results are pickled back to the JVM. Below is the schematic data flow for Pandas UDFs.

Pandas UDFs have a similar data flow. Additionally, to make the process more efficient, Apache Arrow (a cross-language development platform for in-memory data) is used. Arrow allows data to be transferred from the JVMs to the Python processes in vectorized form, resulting in better performance in the Python processes.

There is ample reference material available for data munging, data manipulation, grouped data aggregation and model prediction/execution using Pandas UDFs. This tutorial is a guide to performing “Embarrassingly Parallel Model Training” using Pandas UDFs.

Requirements

*I used the Databricks environment for this tutorial.

To operate on the Databricks File System (DBFS) we use dbutils commands. Create a temporary folder in your DBFS.
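A minimal sketch of this step, assuming the folder is called `temporary` and sits under `dbfs:/FileStore`. `dbutils` is only predefined inside Databricks notebooks, so the hypothetical helper `make_temp_dir` takes it as an argument; in a notebook you would call `make_temp_dir(dbutils)`.

```python
def make_temp_dir(dbutils, path="dbfs:/FileStore/temporary"):
    """Create `path` on DBFS (including missing parents) and return it."""
    # dbutils.fs.mkdirs is the Databricks utility for creating directories.
    dbutils.fs.mkdirs(path)
    return path
```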

This creates a “temporary” directory in “dbfs:/FileStore”

We create two data sets, df1 and df2, to train models on in parallel.
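The two data sets could be generated along these lines. The slopes (2.5 and 3.0) come from the equations quoted later in this post; the column names `X` and `Y`, the row count, the value range and the noise level are assumptions made for illustration, and `make_dataset` is a hypothetical helper.

```python
import numpy as np
import pandas as pd


def make_dataset(slope, n_rows=1000, noise_scale=1.0, seed=0):
    """Return a DataFrame with columns X and Y, where Y = slope * X + noise."""
    rng = np.random.default_rng(seed)
    x = rng.uniform(0.0, 10.0, size=n_rows)
    noise = rng.normal(0.0, noise_scale, size=n_rows)
    return pd.DataFrame({"X": x, "Y": slope * x + noise})


df1 = make_dataset(slope=2.5, seed=1)  # Y = 2.5 X + random noise
df2 = make_dataset(slope=3.0, seed=2)  # Y = 3.0 X + random noise
```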

We save the data sets in zipped format in the temporary folder from step 1. Spark reads each zipped file into a single partition. We use this behaviour to keep the data sets in separate partitions, so that we can train an individual model on each of them.
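One way to write the files is sketched below. It assumes that "zipped" means gzip-compressed CSV and that the Databricks `/dbfs` local mount is available, so that pandas can write to DBFS with ordinary file paths; `save_gzipped` is a hypothetical helper, not part of any library.

```python
import os

import pandas as pd


def save_gzipped(datasets, folder="/dbfs/FileStore/temporary"):
    """Write each DataFrame in `datasets` (name -> DataFrame) to <folder>/<name>.csv.gz."""
    os.makedirs(folder, exist_ok=True)
    paths = []
    for name, pdf in datasets.items():
        path = os.path.join(folder, f"{name}.csv.gz")
        # One gzip-compressed file per data set.
        pdf.to_csv(path, index=False, compression="gzip")
        paths.append(path)
    return paths
```

With the two data sets in hand, the call would look like `save_gzipped({"df1": df1, "df2": df2})`.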

Because the files are zipped, we get one partition per file: two partitions in the Spark DataFrame in total, instead of the default 8. This keeps all the data of one data set on one worker node and in one contiguous block of memory. A machine learning model can therefore easily be trained on each partition with Python libraries, producing one model per partition.
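Reading the folder back and checking the partition count might look like the sketch below. The file pattern `*.csv.gz` and the helper name `load_one_partition_per_file` are assumptions; `spark` is the session that Databricks notebooks provide.

```python
def load_one_partition_per_file(spark, folder="dbfs:/FileStore/temporary"):
    """Read every gzipped CSV in `folder`; return the DataFrame and its partition count."""
    spark_df = spark.read.csv(f"{folder}/*.csv.gz", header=True, inferSchema=True)
    # Inspect the count rather than assume it: for the two files here it should be 2.
    n_partitions = spark_df.rdd.getNumPartitions()
    return spark_df, n_partitions
```

In a notebook, `sparkDF, n = load_one_partition_per_file(spark)` followed by `print(n)` is a quick sanity check before training.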

The UDF here trains a Scikit-Learn model on each partition of the Spark DataFrame and pickles the individual models to “file:/databricks/driver”. Unfortunately, we cannot pickle directly to “FileStore” in the Databricks environment, but we can move the model files to “FileStore” later.
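The sketch below shows what such a per-partition training function could look like. It is not the original `train_lm_pandas_udf`: it is written as a plain generator in the shape that Spark's `DataFrame.mapInPandas` expects (an iterator of pandas DataFrames in, pandas DataFrames out), which is one shuffle-free way to run Python code once per partition. The `X`/`Y` column names, the use of `LinearRegression`, the file naming scheme and the returned columns are all assumptions.

```python
import os
import pickle
import uuid

import pandas as pd
from sklearn.linear_model import LinearRegression

MODEL_DIR = "/databricks/driver"  # local path behind "file:/databricks/driver"


def train_lm_on_partition(batches, model_dir=MODEL_DIR):
    """Fit one LinearRegression on all rows of a partition and pickle it."""
    # A partition may arrive as several Arrow batches, so gather them first.
    frames = list(batches)
    if not frames:
        return  # empty partition: nothing to train
    pdf = pd.concat(frames, ignore_index=True)
    if pdf.empty:
        return
    model = LinearRegression().fit(pdf[["X"]], pdf["Y"])
    # A random file name keeps models from different partitions apart.
    path = os.path.join(model_dir, f"model_{uuid.uuid4().hex}.pkl")
    with open(path, "wb") as fh:
        pickle.dump(model, fh)
    # One summary row per partition; matches "model_path string, coef double".
    yield pd.DataFrame({"model_path": [path], "coef": [float(model.coef_[0])]})
```

Because the function only depends on pandas and Scikit-Learn, it can be tried on a local DataFrame, for example `list(train_lm_on_partition(iter([df1]), model_dir="."))`, before it is handed to Spark.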

Create “sparkDF2”, which calls “train_lm_pandas_udf” on “sparkDF”, created in step 4.

To initiate model training we need to trigger an action on sparkDF2. Make sure the action touches all partitions; otherwise, training will only happen for the partitions it touches.
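As a rough sketch of these two steps, the hypothetical helper below applies a per-partition training function with `mapInPandas` (an assumption; the original notebook may wire the UDF differently) and then uses `collect()` as the action, since it reads the output of every partition. The default schema string is also an assumption and must match what the training function yields.

```python
def run_parallel_training(spark_df, train_fn, schema="model_path string, coef double"):
    """Apply `train_fn` to every partition and force execution on all of them."""
    # mapInPandas is lazy: no model is trained until an action runs.
    spark_df2 = spark_df.mapInPandas(train_fn, schema=schema)
    # collect() needs the rows from every partition, so every model gets trained.
    # Actions such as take(1) may stop after the first partition.
    return spark_df2.collect()
```

The result is tiny (one row per model), so collecting it to the driver is cheap.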

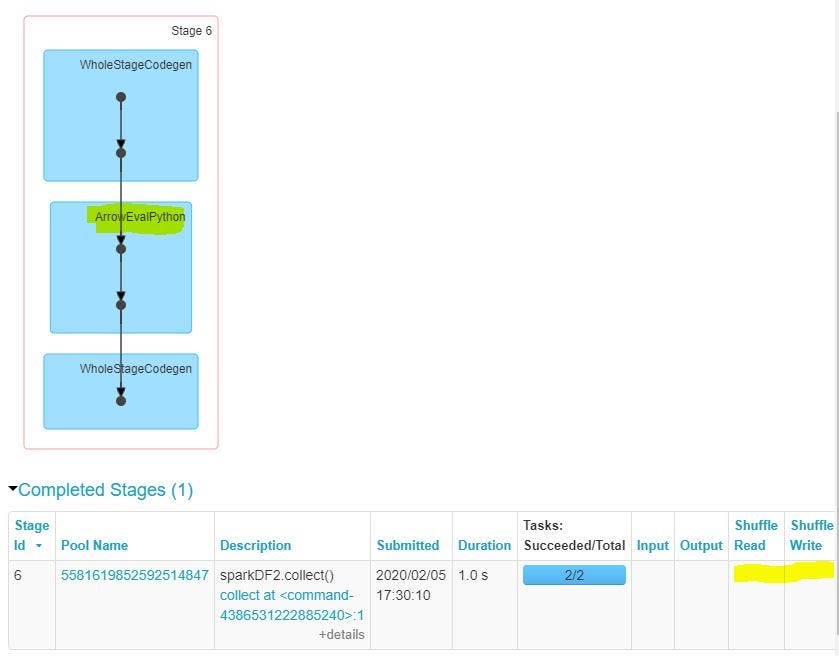

As is evident from the job DAG, the columns are passed to Python using Arrow to complete the model training. Additionally, we can see that there is no shuffle write/read, owing to the embarrassingly parallel nature of the job.

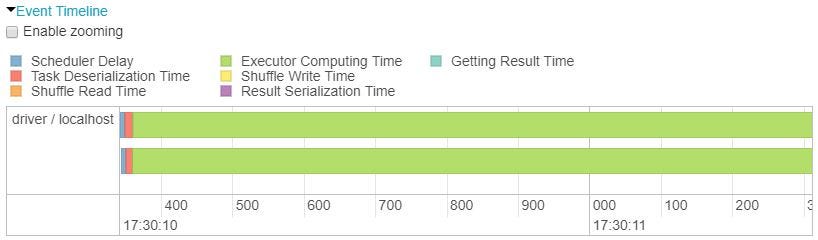

The image above shows the event timeline for the stages of the Spark job. Parallel execution cuts the training time in half, and the gain can grow with a larger cluster and more models to train.

Because we trained the models on dummy data, we have a good idea of what the model coefficients should be. So we can simply load the model files and check whether the coefficients make sense.

The results confirm this. The model coefficient for df1 is 2.55, which is close to our equation for df1: Y = 2.5 X + random noise. For df2 it is 2.93, consistent with df2: Y = 3.0 X + random noise.
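Loading the pickled models and listing their coefficients can be sketched as follows. The directory and the `*.pkl` pattern are assumptions about how the models were saved, and `load_coefficients` is a hypothetical helper; unpickling a Scikit-Learn model requires Scikit-Learn to be installed.

```python
import glob
import os
import pickle


def load_coefficients(model_dir="/databricks/driver", pattern="*.pkl"):
    """Return {file name: coefficients} for every pickled model in `model_dir`."""
    coefs = {}
    for path in sorted(glob.glob(os.path.join(model_dir, pattern))):
        with open(path, "rb") as fh:
            model = pickle.load(fh)
        # Linear models in Scikit-Learn expose fitted slopes as `coef_`.
        coefs[os.path.basename(path)] = list(model.coef_)
    return coefs
```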

The full notebook and code can be downloaded from:

Review one use case of parallel model training here:
