by WeCloudData

June 12, 2025

Blog

At a time when artificial intelligence is rapidly changing industries, the ability to develop AI applications locally, without depending on expensive cloud resources, has become essential for developers, data scientists, and businesses. On September 4, 2025, WeCloudData partnered with Docker to host a live webinar titled “Getting Started with Local AI Development,” featuring Mike Coleman, Staff Solutions Architect at Docker. The session attracted a global audience eager to learn how Docker’s tools can simplify AI workflows, run models locally, and scale to the cloud when needed.

As a prominent data and AI training academy based in Toronto, WeCloudData bridges the gap between new technologies and practical skills. With over eight years of experience, we have trained nearly 10,000 students and worked with more than 40 business clients across North America and beyond, including major Canadian banks, tech companies, and organizations in the US, China, UAE, Saudi Arabia, and Singapore. Our mission is to equip individuals and businesses with focused training in data science, machine learning, AI infrastructure, and more. Events like this webinar reflect our commitment to providing timely, expert-led content that helps professionals stay ahead in the fast-changing AI field.

Similar to our recent “People-First AI: HR Microsummit,” which discussed AI’s role in human resources through engaging conversations on ethical implementation and talent management, this webinar highlighted practical, real-world applications. Attendees gained useful knowledge on using Docker for AI development, reinforcing WeCloudData’s reputation as a top resource for career-advancing education and corporate upskilling.

Mike Coleman brought extensive expertise to the session, based on his long career in tech. Currently a Staff Solutions Architect at Docker, Mike has previously held important roles at industry leaders like Google Cloud, AWS, VMware, and Microsoft. His path reflects a strong passion for helping developers tackle challenges in containerization, cloud computing, and now, AI integration.

Mike’s return to Docker after working there from 2015 to 2018 shows his dedication to the platform that popularized containers. At Docker, he focuses on helping teams adopt container-based AI workflows with confidence, moving from prototypes to production-ready applications. His practical approach aligns with WeCloudData’s hands-on training philosophy, where we emphasize real-world projects and capstone experiences to prepare students for roles in enterprises.

The webinar began with a brief introduction to WeCloudData by our host, highlighting our global presence and focus on customized training. We emphasized our pillars: in-house developed content, a strong learning platform for self-paced and instructor-led courses, personalized support, and capstone projects that have helped graduates secure positions as VPs, managers, and developers in major companies.

Transitioning to the main topic, Mike explained why local AI development is timely amid the AI boom. “AI has been taking a lot of space in the news cycle,” he noted, highlighting how container platforms like Docker speed up MLOps (Machine Learning Operations) for quicker, more competitive deployments.

Mike clarified that Docker is not “just containers.” While Docker popularized container technology, it has developed into a complete ecosystem, with tools like Build Cloud for quicker cloud-based builds, Testcontainers Cloud for integration testing, Scout for security, and hardened images. The focus, however, was on Docker’s AI-specific features, particularly Docker Model Runner.

Docker Model Runner allows users to run Large Language Models (LLMs) locally on Apple Silicon MacBooks (M-series) or Windows machines with NVIDIA hardware and WSL2. It serves models with llama.cpp and exposes an OpenAI-compatible API endpoint. No OpenAI key is required to use it locally, and code written against it can move to cloud services like OpenAI with little change if necessary.
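To make the "OpenAI-compatible" point concrete, here is a minimal sketch of a chat request built with only the Python standard library. The base URL, port, and model tag are assumptions for illustration (they depend on how Model Runner is configured on your machine and which model you pulled) and are not taken from the webinar.

```python
import json
import urllib.request

# Assumptions (not from the webinar): host-side TCP access to Model Runner
# is enabled on port 12434, and a model tagged "ai/llama3.2" has been pulled.
BASE_URL = "http://localhost:12434/engines/v1"
MODEL = "ai/llama3.2"


def build_chat_request(prompt, model=MODEL, base_url=BASE_URL, api_key=None):
    """Build an OpenAI-style chat completion request without sending it."""
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Only a hosted service needs a key; the local endpoint does not.
        headers["Authorization"] = f"Bearer {api_key}"
    body = {"model": model, "messages": [{"role": "user", "content": prompt}]}
    return urllib.request.Request(
        url=f"{base_url}/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


def ask(prompt, **kwargs):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_chat_request(prompt, **kwargs)) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]
```

Because the request shape is the same, switching to a hosted service is mostly a matter of passing a different `base_url`, `model`, and an `api_key`; the calling code stays unchanged.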

Mike shared a benchmarking story: “I benchmarked GPT-4 against local models and chose local because it was sufficient and cost-free.” This aligns with WeCloudData’s approach to efficient, scalable learning. Our courses teach students to prototype cheaply before scaling.

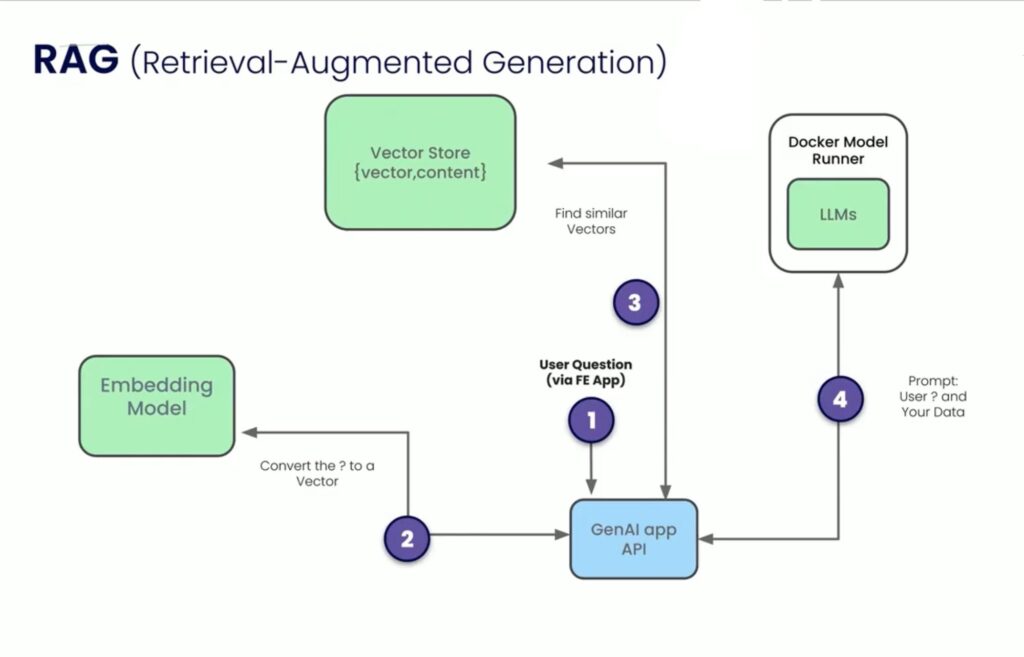

The main part of the session involved a live demo of building a RAG application using Docker. RAG enhances LLMs by adding custom data into prompts, improving accuracy without fully retraining the model.

Mike covered the key concepts and, using his GitHub repo, showed:

1. Pulling models from Docker Hub (e.g., Llama 3.2 in GGUF format).

2. Running models via Docker Desktop’s beta Model Runner feature.

3. Embedding data into vectors using libraries like Sentence Transformers for semantic similarity (distinguishing “apple” as fruit or computer based on context).

4. Querying a vector database to retrieve relevant chunks and feed them to the LLM.
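Steps 3 and 4 can be sketched in a few lines. This is a toy illustration, not the code from Mike's repo: the `embed` function below uses plain word counts as a stand-in for a real embedding model such as Sentence Transformers, the `VectorStore` class is a hypothetical in-memory substitute for a vector database, and the tickets are made-up sample data.

```python
import math
import re
from collections import Counter


def embed(text):
    """Toy embedding: lowercase word counts (stand-in for a real model)."""
    return Counter(re.findall(r"[a-z0-9']+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class VectorStore:
    """Hypothetical in-memory store: keeps (vector, chunk) pairs in a list."""

    def __init__(self):
        self.rows = []

    def add(self, chunk):
        self.rows.append((embed(chunk), chunk))

    def search(self, query, k=2):
        """Return the k chunks most similar to the query."""
        q = embed(query)
        ranked = sorted(self.rows, key=lambda row: cosine(q, row[0]), reverse=True)
        return [chunk for _, chunk in ranked[:k]]


# Made-up sample tickets for illustration only.
store = VectorStore()
for ticket in [
    "Car won't start: check the battery charge and the starter motor.",
    "Laptop screen flickers after a driver update.",
    "Printer shows offline: restart the print spooler.",
]:
    store.add(ticket)

top = store.search("my car won't start", k=1)
print(top)
```

Word counts only match on shared vocabulary, so they cannot tell "apple" the fruit from "apple" the computer. That contextual distinction is exactly what a learned embedding model adds; the retrieval logic around it stays the same.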

In the demo, Mike simulated a support ticket chatbot:

● Without RAG: Generic responses.

● With RAG: Specific answers from embedded tickets. For instance, troubleshooting a “car won’t start” query by checking the battery, starter, etc.
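The difference between the two modes comes down to what goes into the prompt. The sketch below is an assumption about how such a prompt might be assembled, not the demo's actual code; the function name `build_messages` and the instruction wording are hypothetical.

```python
def build_messages(question, context_chunks=None):
    """Return OpenAI-style chat messages, optionally grounded in retrieved text."""
    if not context_chunks:
        # Without RAG: the model sees only the question.
        return [{"role": "user", "content": question}]

    # With RAG: retrieved chunks are placed in a system message.
    context = "\n".join(f"- {chunk}" for chunk in context_chunks)
    system = (
        "Answer using only the support tickets below. "
        "If they do not cover the question, say so.\n" + context
    )
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": question},
    ]


plain = build_messages("My car won't start. What should I check?")
grounded = build_messages(
    "My car won't start. What should I check?",
    ["Car won't start: check the battery charge and the starter motor."],
)
```

The model itself is unchanged in both cases; only the grounded call gives it ticket text to draw on, which is why no retraining is needed.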

He addressed hardware limitations: “Performance depends on RAM and GPU. My MacBook shares 18GB RAM, while my NVIDIA PC has a more powerful GPU but limited VRAM.” Attendees appreciated the troubleshooting tips, which fit with WeCloudData’s capstone projects where students build similar AI applications.

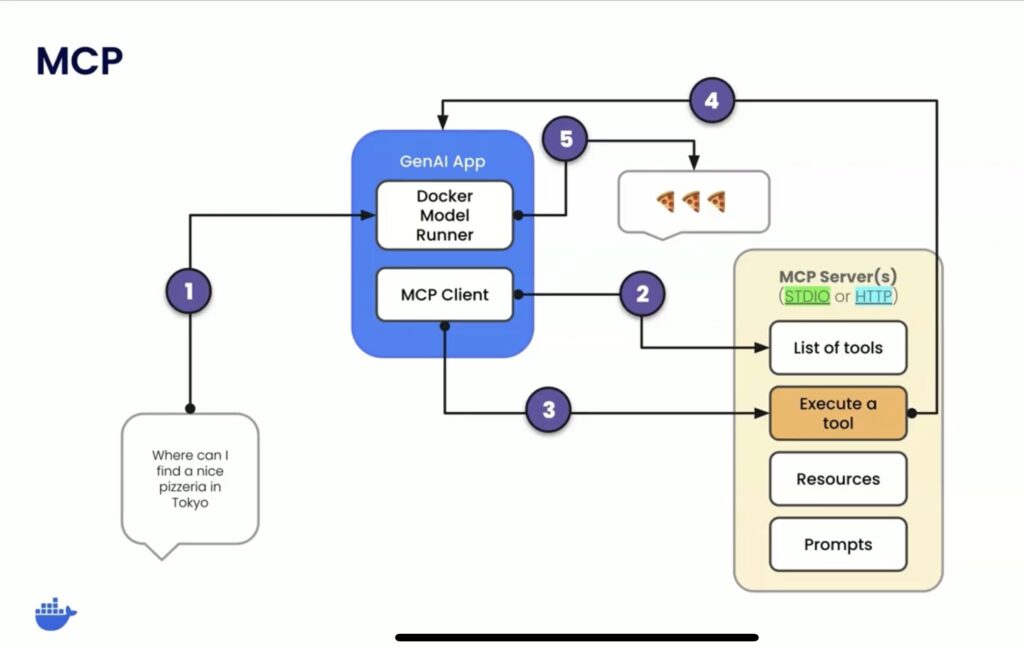

Mike explored function calling, where LLMs invoke tools (like weather APIs) as needed. He introduced Docker’s MCP Catalog, which contains 170+ MCP servers for easy, secure deployment. Challenges like managing dependencies and secrets are addressed through containers and Docker Secrets.

Multiple MCP servers can be fronted with a single endpoint and integrated with tools like VS Code Copilot or GitHub. Mike created a repo live using voice commands, showing seamless Docker AI-agent workflows.
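The function-calling pattern Mike described can be sketched as follows. This assumes the OpenAI-style tool-call format; the `get_weather` tool, its canned result, and the hand-written `example_call` are hypothetical and stand in for a real weather API and a real model response.

```python
import json


def get_weather(city):
    """Hypothetical tool: a real one would call a weather API."""
    return {"city": city, "forecast": "sunny"}


# Registry mapping tool names to the Python functions that implement them.
TOOLS = {"get_weather": get_weather}

# Tool description sent to the model so it knows what it may call.
TOOL_SPECS = [{
    "type": "function",
    "function": {
        "name": "get_weather",
        "description": "Get the current weather for a city.",
        "parameters": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    },
}]


def run_tool_call(tool_call):
    """Execute one tool call and return the 'tool' message to send back."""
    name = tool_call["function"]["name"]
    args = json.loads(tool_call["function"]["arguments"])
    if name not in TOOLS:
        result = {"error": f"unknown tool: {name}"}
    else:
        result = TOOLS[name](**args)
    return {
        "role": "tool",
        "tool_call_id": tool_call["id"],
        "content": json.dumps(result),
    }


# Hand-written example shaped like a tool call from an OpenAI-compatible model.
example_call = {
    "id": "call_1",
    "type": "function",
    "function": {"name": "get_weather", "arguments": '{"city": "Toronto"}'},
}
reply = run_tool_call(example_call)
```

The model never runs code itself: it emits a structured request, the application executes the matching function, and the result is appended to the conversation as a `tool` message for the model to use in its final answer. An MCP server packages tools like this behind a standard protocol so they do not have to be wired up by hand.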

From the session, attendees learned:

This webinar builds on WeCloudData’s knowledge in AI infrastructure. Our programs cover Docker in courses like Introduction to Docker, part of broader tracks: Data Engineering, Machine Learning, and AI. Graduates often join elite teams, showcasing projects that reflect Mike’s demos. The full session is available on WeCloudData’s YouTube channel: Docker Getting Started with AI Development.

If Mike’s session sparked your interest, reserve your spot in upcoming WeCloudData events or enroll in our bootcamps. Our career-focused offerings include:

A fun fact revisited: Mike’s light shows remind us that creativity drives innovation, much like WeCloudData’s capstones turn ideas into portfolios.

Don’t miss out: visit weclouddata.com to start your journey. Whether you’re a beginner or an enterprise AI team, WeCloudData provides the tools, knowledge, and network to succeed in a data-driven world.
