by WeCloudData

May 14, 2025

The blog is posted by WeCloudData’s student Sneha Mehrin.

This article is part of a series and continues from the previous article, where we built a data warehouse in Redshift to store the streamed and processed data.

Let’s briefly review our pipeline and the schedule of the batch jobs that we developed.

We already covered the streaming and batch jobs in AWS, so now let’s move on to Einstein Analytics (EA).

In our technical design, we connect EA to a Redshift cluster using the Redshift Connector provided by EA and register the data as a dataset using a dataflow.

Please note that this article assumes readers already have some idea of how to get started with Einstein.

Since I had already deleted the Redshift cluster to avoid any costs, the nodes are shown with a warning. However, the process remains the same.

The dashboard has three main components.

These are compact number widgets with the static date filter bound to them in the filter section.

The logic behind them is quite simple; the only additional configuration these visualisations have is the bindings.

New Questions : Unique(question_id)

Average Answer count : avg(answer_count)

Average View Count : avg(view_count)

Average Score : avg(view_score)
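To make the four formulas above concrete, here is a minimal Python sketch that computes the same aggregates outside EA. The sample rows and the `compute_kpis` helper are hypothetical and exist only for illustration; the column names are taken from the formulas as written, and the real values come from the Redshift-backed dataset.

```python
from statistics import mean

# Hypothetical sample rows; column names follow the formulas above.
questions = [
    {"question_id": 101, "answer_count": 2, "view_count": 40, "view_score": 3},
    {"question_id": 102, "answer_count": 0, "view_count": 10, "view_score": 1},
    # Repeated id, to show that the unique count ignores it.
    {"question_id": 101, "answer_count": 2, "view_count": 40, "view_score": 3},
    {"question_id": 103, "answer_count": 4, "view_count": 70, "view_score": 5},
]


def compute_kpis(rows):
    """Compute the four number-widget metrics over a list of row dicts."""
    return {
        # unique(question_id): count of distinct question ids
        "new_questions": len({row["question_id"] for row in rows}),
        # avg(...): plain mean over every row, repeated ids included
        "avg_answer_count": mean(row["answer_count"] for row in rows),
        "avg_view_count": mean(row["view_count"] for row in rows),
        "avg_view_score": mean(row["view_score"] for row in rows),
    }


kpis = compute_kpis(questions)
print(kpis)
```

Note that only the first metric deduplicates; the averages are taken over all rows, which is how a plain `avg` over the dataset would behave.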

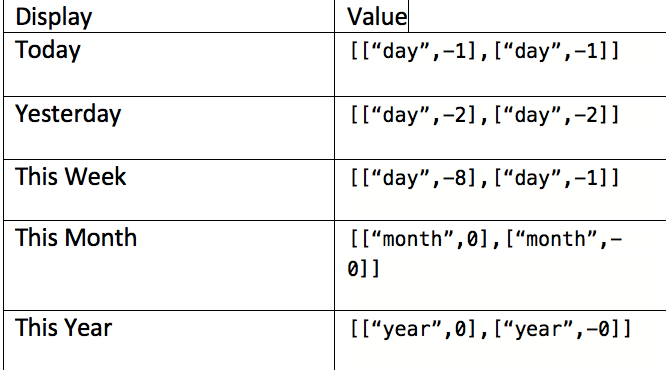

Here is the snippet of the bindings in the filter section, as encoded in the underlying JSON of the dashboard.

These visualisations do not have any bindings, but they have an additional metric, % of growth, in the tooltip.

Here is a quick video on how I configured these charts.

Since the visualisation can have only one measure, the key tip is to create % of Growth using a compare table and then hide it, so that the query projects it but it doesn’t render in the visualisation. You can then use the hidden measure in the tooltip.
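The exact formula behind the % of Growth column is not shown here. As a rough sketch, assuming the usual period-over-period definition, (current - previous) / previous × 100, the hidden measure could be computed like this in Python. The `percent_growth` helper and the sample counts are hypothetical.

```python
def percent_growth(values):
    """Return period-over-period growth in percent for a list of counts.

    The first period has no predecessor, and growth from a zero base is
    undefined, so both cases yield None.
    """
    if not values:
        return []
    growth = [None]  # first period: nothing to compare against
    for prev, curr in zip(values, values[1:]):
        if prev == 0:
            growth.append(None)
        else:
            growth.append((curr - prev) * 100 / prev)
    return growth


# Hypothetical question counts for three consecutive periods.
monthly_questions = [200, 250, 225]
print(percent_growth(monthly_questions))
```

The chart would still plot only the counts; the growth values play the role of the hidden column that is projected by the query and surfaced in the tooltip.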

Since these charts are essentially a new query, I used pages to provide a seamless experience of navigating between the two views.

I used SAQL for the underlying query of these charts.

Here is a quick video on how I configured this visualisation.

That’s all for this series!!

It was a true learning experience to build this pipeline. If you have any questions, please feel free to shoot me a message!!