Welcome back! In our previous blog, “From Zero to 🤗: Your First Hug with Hugging Face,” we introduced the Hugging Face ecosystem and its user-friendly tools for exploring AI models. Now, let’s take the next step and enter the world of Transformers. Hugging Face Transformers are powerful models used for many natural language processing (NLP) tasks.

In this blog, we’ll learn what Transformers are, explore Hugging Face’s Transformers library, and build a simple AI storyteller that generates text with GPT-2. This blog is beginner-friendly, but still powerful. Let’s get started with WeCloudData, the leading data and AI training academy!

Understanding Transformers

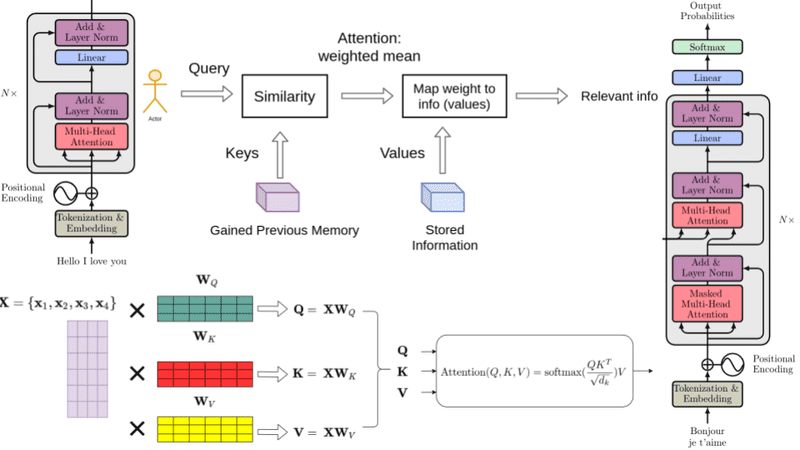

Transformers are a type of deep learning model first presented by researchers at Google in the 2017 paper “Attention Is All You Need.” Transformer-based models are the backbone of many AI text-generation tools, such as ChatGPT. The original Transformer uses an encoder-decoder structure, designed for sequence-to-sequence language tasks such as machine translation; many later models, including GPT-2, use only the decoder part. Transformers have revolutionized natural language processing by enabling language models to understand context and relationships in text more effectively than earlier architectures such as recurrent neural networks (RNNs).

Key features of Transformers:

- Self-Attention Mechanism: Allows the model to weigh the importance of different words in a sentence, capturing context more effectively.

- Contextual Embeddings: The meaning of each word is affected by its context in the sentence, allowing the model to capture complex relationships.

- Parallelization: Transformers process all words in a sequence simultaneously, leading to faster training times.

- Scalability: They can be scaled up to handle large datasets and complex tasks.
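To make the self-attention idea more concrete, here is a minimal sketch of scaled dot-product attention, softmax(Q·Kᵀ / √d_k)·V, written in plain Python. The three 2-dimensional token vectors are made-up numbers, and reusing them directly as queries, keys, and values is a simplification: a real Transformer computes Q, K, and V with learned projections, uses several attention heads, and processes all tokens at once with matrix operations instead of a Python loop.

```python
import math


def softmax(scores):
    # Subtract the max before exponentiating for numerical stability
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(queries, keys, values):
    """Scaled dot-product attention: softmax(Q K^T / sqrt(d_k)) V.

    Each argument is a list of vectors, one per token.
    Returns (outputs, weights), where weights[i][j] is how much
    token i attends to token j.
    """
    d_k = len(keys[0])
    outputs, weights = [], []
    for q in queries:
        # Score this token's query against every token's key
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d_k) for k in keys]
        w = softmax(scores)
        weights.append(w)
        # New representation = attention-weighted sum of all value vectors
        out = [sum(wj * v[i] for wj, v in zip(w, values)) for i in range(len(values[0]))]
        outputs.append(out)
    return outputs, weights


# Toy example: 3 "tokens", each a made-up 2-dimensional embedding.
# A real model would project these into separate Q, K and V vectors first.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, weights = self_attention(tokens, tokens, tokens)
for row in weights:
    print([round(x, 3) for x in row])
```

Each printed row sums to 1: it shows how one token spreads its attention over every token in the sentence, which is how the model builds the contextual embeddings described above.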

To learn more about Transformers, explore these blogs: Complete Guide to Generative AI: Applications, Benefits & More, and Generative AI with LLMs: The Future of Creativity and Intelligence.

🤗 Hugging Face’s Transformers Library

Hugging Face’s Transformers library provides pre-trained models and tools to implement Transformer-based NLP tasks. It supports multiple frameworks like TensorFlow and PyTorch, making it versatile and a good option for many projects.

Key components:

- Pre-trained Models: Access to thousands of models trained on diverse datasets.

- Tokenizers: Tools to convert text into numerical representations suitable for machine learning model input.

- Pipelines: High-level interfaces for common tasks like text classification, question answering, and more.
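As a rough illustration of what a tokenizer does, the sketch below converts text into token IDs and back again. The helper names `show_tokenization` and `load_gpt2_tokenizer` are hypothetical, chosen for this example; `AutoTokenizer.from_pretrained`, `encode`, and `decode` are the library’s own methods. Because the helper accepts any tokenizer object, you can reuse it with other models.

```python
def show_tokenization(tokenizer, text):
    """Encode text into token IDs, then decode the IDs back into text."""
    token_ids = tokenizer.encode(text)     # text -> list of integer IDs
    decoded = tokenizer.decode(token_ids)  # integer IDs -> text
    return token_ids, decoded


def load_gpt2_tokenizer():
    """Load GPT-2's tokenizer (requires `transformers`; downloads files on first call)."""
    # Imported here so show_tokenization can be defined without transformers installed
    from transformers import AutoTokenizer
    return AutoTokenizer.from_pretrained("gpt2")


# Example, e.g. in a Colab cell:
# ids, text = show_tokenization(load_gpt2_tokenizer(), "Hugging Face makes NLP fun!")
# print(ids)   # a list of integers, one per token
# print(text)  # the sentence rebuilt from those IDs
```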

Step-by-Step Guide: Building an AI Storyteller with GPT-2

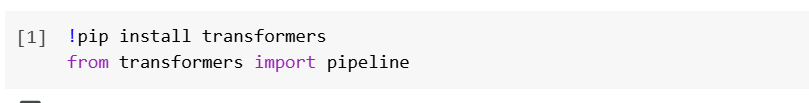

Step 1: Install required libraries

The first step in our project is to install the required libraries using pip. We will use PyTorch in this project, but Hugging Face also supports TensorFlow. The tools we use are Google Colab, Hugging Face, and the Transformers library.

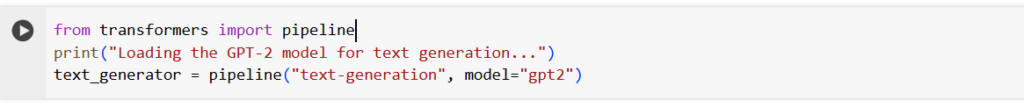
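If you are unsure whether the installation worked, a small check like the one below reports which packages Python still cannot find. The `missing_packages` helper is hypothetical and not part of the original project; in Google Colab, the install itself is typically run in a cell as `!pip install transformers torch`.

```python
# In a Colab cell, install the libraries first:
#   !pip install transformers torch
import importlib.util


def missing_packages(names=("transformers", "torch")):
    """Return the names of packages that cannot be found for import yet."""
    return [name for name in names if importlib.util.find_spec(name) is None]


missing = missing_packages()
if missing:
    print("Still missing:", ", ".join(missing))
else:
    print("All set: transformers and torch are installed.")
```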

Step 2: Loading Our AI Writer

In this tutorial, we explore the text generation capabilities of Transformers. We use Hugging Face’s library to load GPT-2, a Transformer model pretrained on a very large corpus of English data in a self-supervised fashion.

We give GPT-2 a prompt, and it generates text that continues from that prompt. In this example, our focus is on generating multiple stories from a single prompt.

What this code does

- First, the “pipeline” function is imported from Hugging Face’s transformers library. It is a user-friendly shortcut that lets you use powerful Transformer models for tasks like text generation and classification with just a few lines of code.

- “text_generator” is the variable we’re saving the pipeline into, so we can use it later to generate stories.

- “text-generation” tells Hugging Face what kind of task we want to perform. In our case, the task is to continue a piece of text, such as completing a sentence or writing a story.

- “model="gpt2"” specifies which Transformer model is used to complete the task.

In plain English, you are just saying:

“Hey, Hugging Face, give me a tool to write stories using the GPT-2 AI model.”
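The bullets above describe a short piece of code; a minimal sketch of it might look like this. The core call, `pipeline("text-generation", model="gpt2")`, follows the description. Wrapping it in a function named `load_story_writer` is our own choice, so that the model weights are only downloaded when you actually call it.

```python
def load_story_writer(model_name="gpt2"):
    """Return a Hugging Face text-generation pipeline for the given model."""
    # Imported inside the function so defining it does not require transformers;
    # the model weights download the first time the pipeline is created.
    from transformers import pipeline

    # "text-generation" names the task; model= picks which Transformer performs it
    return pipeline("text-generation", model=model_name)


# In Colab:
# text_generator = load_story_writer()
```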

Step 3: Define Prompt to Start Story

A prompt gives an AI model its starting instructions. For GPT-2, the prompt is the opening text that the model continues, so it sets the tone and context for the story. A good prompt helps the AI understand what kind of story you want: its characters, setting, or style.

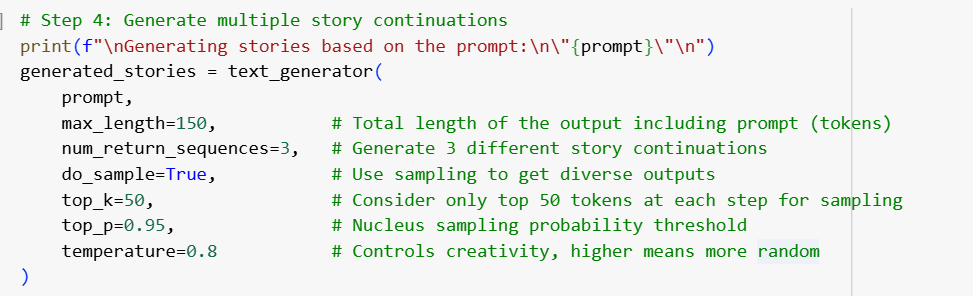
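Because GPT-2 continues text rather than following instructions, a prompt that reads like the first line of a story tends to work well. The `build_prompt` helper below is hypothetical, and the character, setting, and action are placeholder values; it simply shows how those story ingredients can be combined into one prompt string.

```python
def build_prompt(character, setting, opening_action):
    """Hypothetical helper: combine story ingredients into an opening line for GPT-2."""
    # GPT-2 continues whatever text it receives, so we phrase the prompt
    # as the first sentence of the story itself.
    return f"In {setting}, {character} {opening_action}"


prompt = build_prompt(
    character="a curious young robot",
    setting="a quiet village at the edge of a glowing forest",
    opening_action="discovered a map that no one else could read.",
)
print(prompt)
```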

Step 4: Generate multiple story continuations

We want three stories from a single prompt. This one chunk of code does a lot of powerful work in one go. We are telling the AI: “This is the prompt for my story. Now give me three versions of it, each captivating, a bit random, and about 150 words long.”

As a result, the model returns three unique story completions. It’s like asking three different authors to finish the same sentence: each goes in their own direction, with their own context and explanation.

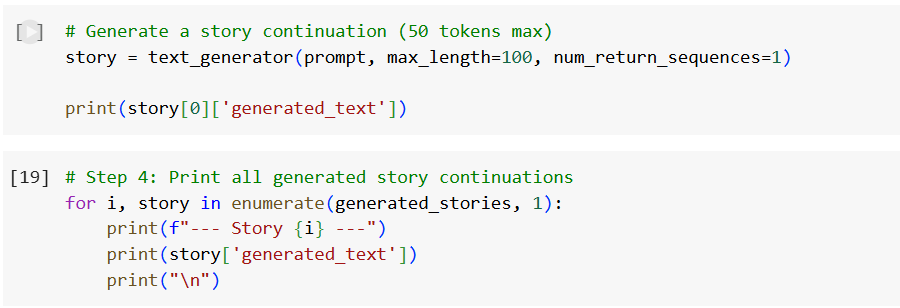
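Here is a sketch of this step, assuming a `text_generator` pipeline like the one created in Step 2 and a `prompt` string from Step 3. The parameter values (`max_new_tokens=150`, `temperature=0.9`, `top_k=50`) are illustrative and may differ from the ones in the screenshot, and the `generate_stories` wrapper is our own. `num_return_sequences=3` asks for three stories, while `do_sample=True` together with `temperature` and `top_k` adds the controlled randomness that makes each story different. Note that `max_new_tokens` counts tokens, not words, so 150 tokens is usually somewhat fewer than 150 English words.

```python
def generate_stories(text_generator, prompt, num_stories=3, max_new_tokens=150):
    """Ask a text-generation pipeline for several different continuations of one prompt."""
    return text_generator(
        prompt,
        max_new_tokens=max_new_tokens,     # length limit, counted in tokens (not words)
        num_return_sequences=num_stories,  # how many separate stories to return
        do_sample=True,                    # sample randomly instead of always taking the likeliest token
        temperature=0.9,                   # below 1.0 = a bit more focused, above 1.0 = more random
        top_k=50,                          # only sample from the 50 most likely next tokens
    )


# In Colab, with text_generator and prompt from the earlier steps:
# from transformers import set_seed
# set_seed(42)  # optional: makes the random sampling repeatable
# generated_stories = generate_stories(text_generator, prompt)
```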

Step 5: Printing Generated Stories

This is the final step, where we read the output from the transformer. In the previous step, we asked the transformer to generate the stories. These stories are now stored in a list named “generated_stories.” Each item in that list is a dictionary whose ‘generated_text’ key holds the actual content of the story (by default, this text includes the original prompt followed by the model’s continuation).

In simple terms, it means:

“Let’s read each of the stories the AI has neatly written for us, much like turning the pages of a short storybook.”

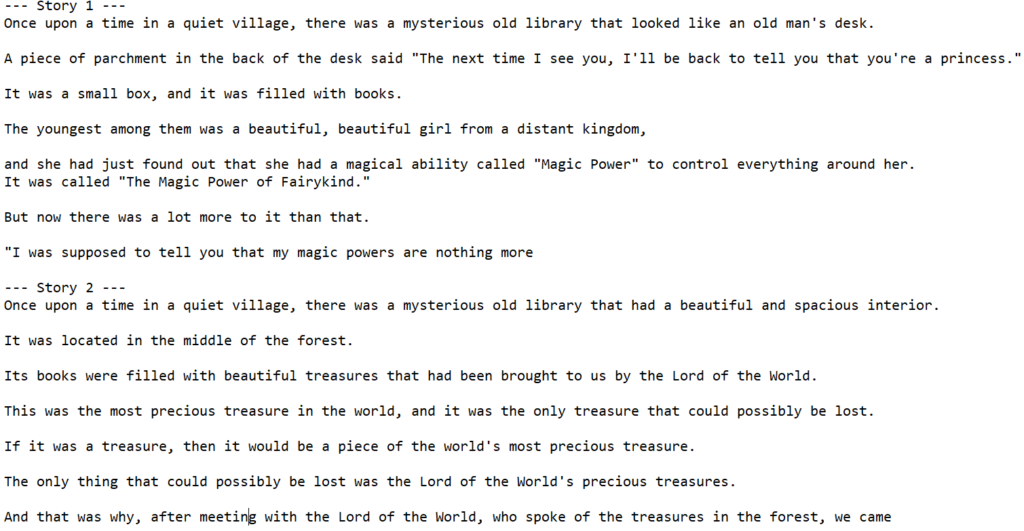

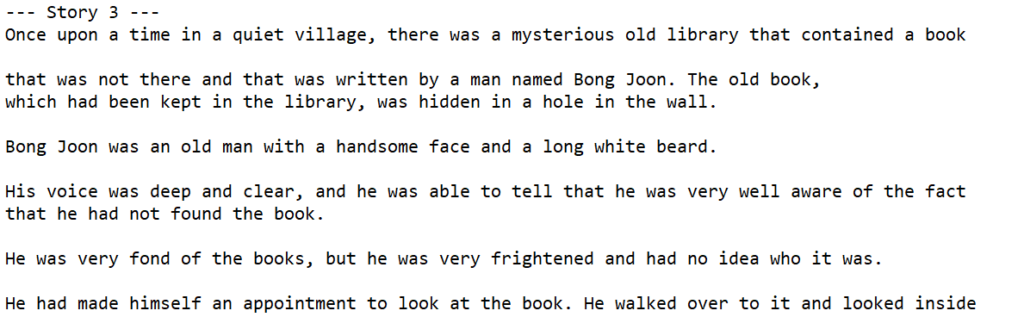

The Stories Generated by Transformer
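A minimal sketch of the printing step follows. The `format_stories` name is hypothetical, and the `sample` list holds placeholder text shaped like the pipeline’s output (a list of dictionaries with a ‘generated_text’ key), so the code runs without downloading a model. With real output, pass `generated_stories` instead of `sample`.

```python
def format_stories(generated_stories):
    """Turn the pipeline's list of {'generated_text': ...} dicts into readable text."""
    parts = []
    for number, story in enumerate(generated_stories, start=1):
        parts.append(f"Story {number}:\n{story['generated_text'].strip()}")
    # Separate stories with a blank line, like pages in a storybook
    return "\n\n".join(parts)


# Placeholder data shaped like the pipeline's output
sample = [
    {"generated_text": "Once upon a time, a robot found a map."},
    {"generated_text": "Once upon a time, a robot learned to sing."},
]
print(format_stories(sample))
```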

Try it yourself and experiment to improve the results!

Your AI Journey Starts Here!

We’ve only just scratched the surface of what’s possible with Hugging Face. This platform makes AI enjoyable, accessible, and powerful for everyone, regardless of background or expertise.

If you’re passionate about data, AI, and real-world skills, be sure to check out WeCloudData, your launchpad into the future of tech.

What WeCloudData Offers

- WeCloudData’s Corporate Training programs aim to meet the needs of forward-thinking companies. With hands-on, expert-led instruction, our courses aim to bridge the skills gap and help your organization thrive in today’s data-driven economy.

- Live public training sessions led by industry experts

- Career workshops to prepare you for the job market

- Dedicated career services

- Portfolio support to help showcase your skills to potential employers.

- Enterprise Clients: Our expert team offers 1-on-1 consultations.

Join WeCloudData to kickstart your learning journey and unlock new career opportunities in Artificial Intelligence.