

by WeCloudData

January 25, 2025


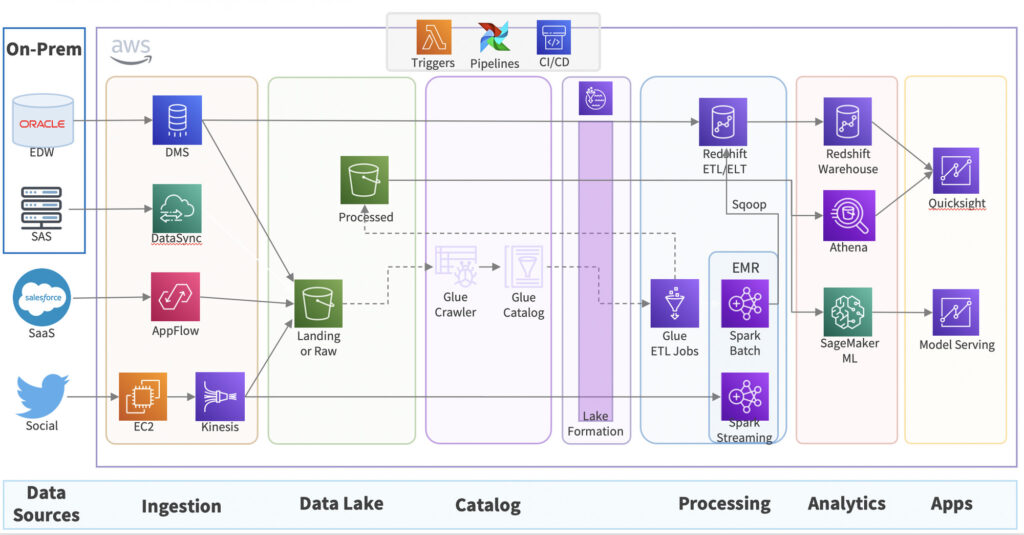

Data engineering has become a hot topic in recent years, mainly due to the rise of artificial intelligence, big data, and data science. Every enterprise is going through digital transformation, and data holds enormous value for enterprises. Whatever an organization's data requirements are, the first step is to establish a data architecture/platform and build pipelines to collect, transmit, and transform data, which is what data engineering does.

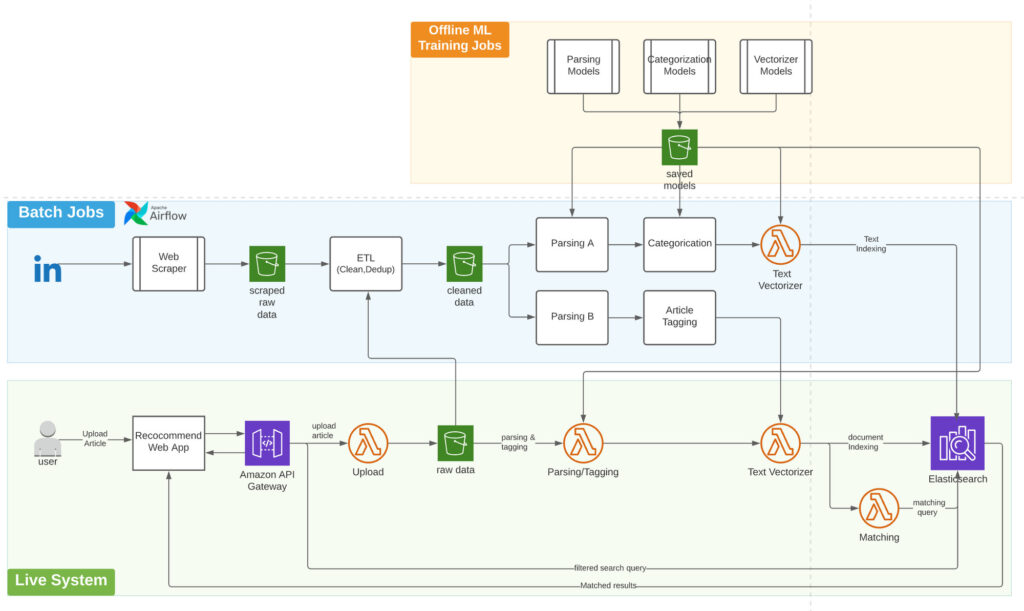

In a data engineering project, data is collected, organized, processed, distributed, and stored. For example, before an AI or data analysis project starts, data engineers first need to connect to various data sources to collect data, then work on data transmission and transformation, and finally store the data in a designated place (e.g. a data warehouse) in a designated format (e.g. database tables). We call this full process “a data pipeline”. From the endpoint where the final data is stored, the AI or data analysis team connects to the data and starts their data activities. In many cases, data engineers also automate this data pipeline.
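To make the collect, transform, and store flow concrete, here is a minimal sketch in Python. It assumes a tiny hypothetical CSV export and uses an in-memory SQLite database as a stand-in for the data warehouse; the `orders` table and the `extract`/`transform`/`load`/`run_pipeline` functions are illustrative names, not part of any particular tool.

```python
import csv
import io
import sqlite3

# Hypothetical raw export from a source system (normally a file, an API, or a database).
RAW_CSV = """order_id,customer,amount
1,alice,19.99
2,bob,5.00
3,alice,12.50
"""

def extract(raw_text):
    """Collect: parse the raw CSV text into a list of dicts."""
    return list(csv.DictReader(io.StringIO(raw_text)))

def transform(rows):
    """Transform: cast types and normalize customer names."""
    return [
        (int(r["order_id"]), r["customer"].strip().title(), float(r["amount"]))
        for r in rows
    ]

def load(records, conn):
    """Store: write the cleaned records into a destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS orders "
        "(order_id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", records)
    conn.commit()

def run_pipeline(raw_text, conn):
    """Chain the three steps; a scheduler could call this automatically."""
    load(transform(extract(raw_text)), conn)

conn = sqlite3.connect(":memory:")  # stand-in for a data warehouse
run_pipeline(RAW_CSV, conn)
print(conn.execute(
    "SELECT customer, SUM(amount) FROM orders GROUP BY customer ORDER BY customer"
).fetchall())
```

Keeping each step in its own function makes the steps easy to test individually and lets an orchestrator retry or schedule the whole chain.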


Data engineering is a field that has been growing rapidly in the past few years. The Google Trends chart above speaks for itself. You probably have lots of questions about data engineering.

This article explains what data engineering is and introduces some useful use cases.

Data engineering is the process of collecting, ingesting, storing, transforming, cleaning, and modelling big data. It is governed by people and processes, driven by modern tools, and practiced by data engineers to help data and analytics teams drive innovation.

It is safe to say that anything data-related has something to do with data engineering. Most importantly, every company that invests in data science and AI needs a very solid data engineering capability.

The following are important aspects of data engineering.

Data collection is the process of collecting and extracting data from different sources. Most companies have at least a dozen different data sources. Data can come from different application systems, SaaS platforms, the Internet, and so on.

When data is collected from the source, it needs to be ingested into a destination. We could simply save the data as a CSV file on a server; however, a well-designed system will take many things into consideration:

Data storage is a big topic. Depending on how the data will be used, data engineers may choose different storage systems and formats. For example, analytics engineers and data analysts may prefer data to be stored in a relational database (RDBMS) such as Postgres or MySQL, or in a data warehouse system such as Snowflake or Redshift. Big data engineers may want the data persisted in a data lake such as Hadoop HDFS or AWS S3. Modern data engineers may even want big data stored in a lakehouse.

Data can also be stored in many different formats, from flat files such as CSV to JSON and Parquet. In the database world, data can be stored in row-oriented or column-oriented databases.
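As a toy illustration of the row-oriented vs. column-oriented distinction, the sketch below lays out the same three hypothetical sales records both ways in plain Python. It is not how any real database engine is implemented; real columnar formats such as Parquet add encoding and compression on top of this basic idea.

```python
# Three hypothetical sales records.
records = [
    {"id": 1, "region": "East", "amount": 100},
    {"id": 2, "region": "West", "amount": 250},
    {"id": 3, "region": "East", "amount": 75},
]

# Row-oriented layout: each record's values are kept together,
# which suits reading or writing whole records (typical transactional work).
row_store = [tuple(r.values()) for r in records]

# Column-oriented layout: each column's values are kept together,
# which suits scanning a few columns across many rows (typical analytics).
column_store = {key: [r[key] for r in records] for key in records[0]}

# An analytical query ("total amount") reads a single column in the column store...
total_from_columns = sum(column_store["amount"])
# ...but has to walk every full row in the row store.
total_from_rows = sum(row[2] for row in row_store)

print(row_store)
print(column_store)
print(total_from_columns, total_from_rows)
```

Both layouts hold the same information; the difference is which access pattern touches less data.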

Most data systems have a staging environment where raw data can be prepared before being loaded into the destination systems. At this stage, data goes through a series of transformations to meet business, compliance, and analytics needs. Data engineers spend a big chunk of their effort on these transformations. Common data transformation steps include:
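A minimal sketch of a few typical staging transformations (deduplication, type casting, standardizing values, and handling missing values) applied to hypothetical customer records. The field names and the `"UNKNOWN"` placeholder are assumptions chosen for illustration; real pipelines would encode their own business rules.

```python
from datetime import date

# Hypothetical raw records as they might arrive in a staging area.
raw = [
    {"id": "1", "email": " Alice@Example.com ", "signup": "2023-01-05", "country": "ca"},
    {"id": "1", "email": " Alice@Example.com ", "signup": "2023-01-05", "country": "ca"},  # duplicate
    {"id": "2", "email": None, "signup": "2023-02-10", "country": "US"},
    {"id": "3", "email": "carol@example.com", "signup": "2023-03-15", "country": None},
]

def clean(records):
    seen = set()
    out = []
    for r in records:
        # 1. Deduplicate on the business key.
        if r["id"] in seen:
            continue
        seen.add(r["id"])
        out.append({
            # 2. Cast types: string id -> int, ISO date string -> date.
            "id": int(r["id"]),
            "signup": date.fromisoformat(r["signup"]),
            # 3. Standardize values: trim and lowercase emails (keep missing ones as None).
            "email": r["email"].strip().lower() if r["email"] else None,
            # 4. Handle missing values with an explicit placeholder, then uppercase codes.
            "country": (r["country"] or "UNKNOWN").upper(),
        })
    return out

cleaned = clean(raw)
for row in cleaned:
    print(row)
```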

Data engineers leverage many tools at this stage. Depending on the data processing platform, data engineers may use tools such as:

Data engineers usually build data processing DAGs (Directed Acyclic Graphs) whose steps depend on each other. To build high-quality data applications, data pipelines should be as automated as possible and require intervention only when part of the lineage breaks and needs a data engineer's attention. Data pipelines can become quite complex and unwieldy, so they need to be well tested, and data engineers need to adopt software engineering best practices.

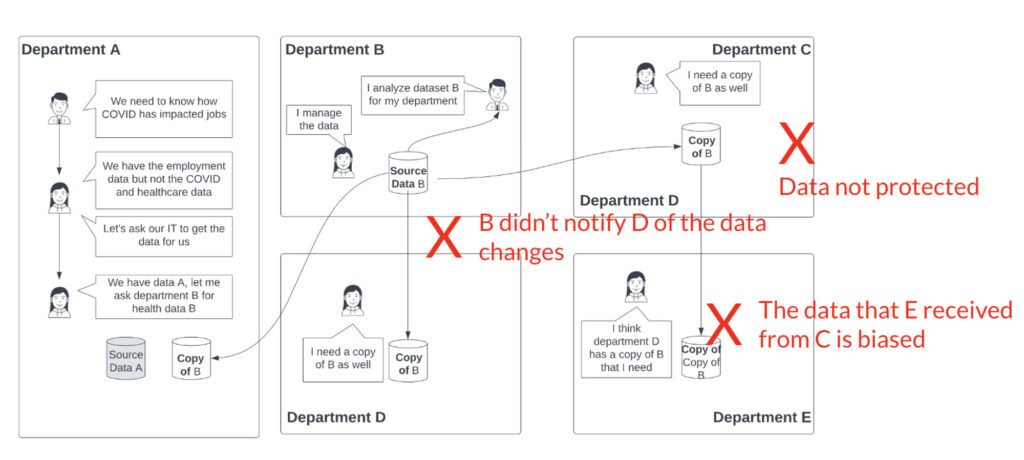

Data quality is a big part of enterprise data governance efforts. While building a data pipeline, data engineers always need to include data quality checks so that the pipeline doesn't run into “garbage in, garbage out” problems. A small issue in a pipeline may result in very poor data quality and ruin the entire data science and analytics effort.
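A minimal sketch of in-pipeline data quality checks, assuming hypothetical order rows and three illustrative rules (unique key, no null foreign key, non-negative amount). Dedicated data quality tools exist for this, but the underlying idea is the same: validate, and stop bad data before it flows downstream.

```python
# Hypothetical rows produced by an upstream pipeline step.
rows = [
    {"order_id": 1, "amount": 19.99, "customer_id": 10},
    {"order_id": 2, "amount": -5.00, "customer_id": 11},   # negative amount
    {"order_id": 2, "amount": 7.50, "customer_id": None},  # duplicate id, missing customer
]

def check_quality(rows):
    """Return a list of human-readable data quality failures (empty means pass)."""
    failures = []
    ids = [r["order_id"] for r in rows]
    if len(ids) != len(set(ids)):
        failures.append("order_id is not unique")
    for r in rows:
        if r["customer_id"] is None:
            failures.append(f"order {r['order_id']}: customer_id is null")
        if r["amount"] < 0:
            failures.append(f"order {r['order_id']}: amount is negative")
    return failures

problems = check_quality(rows)
if problems:
    # A production pipeline would typically fail the run (or quarantine the rows)
    # here so bad data never reaches downstream tables; this sketch only reports.
    print("Data quality check failed:")
    for p in problems:
        print(" -", p)
```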

Data governance is not just a technical matter; it involves people and processes. Companies assign data owners and stewards to come up with governance policies. Data quality and monitoring processes are essential parts of governance.

In the past few years, we've started to see the engineering disciplines converge. Modern data engineering requires skill sets from many disciplines. For example, in some companies a data engineer may need to know DevOps to a certain degree so that she/he can write well-tested and automated data pipelines. Data engineers often write production-grade software such as data connectors and pipelines. In many organizations, data engineers may also need to do basic analytics, build dashboards, and even help data scientists automate machine learning pipelines.

At WeCloudData, we often hear this question from our students: can data engineering be automated? The answer is: of course! And it should be automated as much as possible.

Automation is happening in every industry with the advancement of AI. For example, ChatGPT can probably write a data pipeline script simply by predicting the next word. GitHub Copilot is already powering a lot of developers' and software engineers' daily work.

In recent years, we have also seen many projects and startups building GUI-based data engineering tools, which make building data pipelines a low-code or no-code effort.

However, from the perspective of WeCloudData and many experts, producing high-quality data for analytics and AI is so crucial to a company's innovation that companies rarely will, and rarely should, rely completely on code generated by bots and AI. While much low-level code should be automated, business logic is often hard to automate. For example, banks using exactly the same tools and systems will end up building very different data engineering pipelines due to differences in legacy systems, talent, budget, existing infrastructure, internal policies, and business logic.

We'd like to point out that data engineering is actually not a new concept. It has been around for as long as data systems have existed, though it may have lived under different names. For example, data engineers might be called ETL Developers in a bank, Big Data Developers in a tech company, Data Warehouse Engineers or Data Architects in an insurance company, or simply software engineers in a data-driven startup. We call this traditional data engineering because the field has evolved so fast in the past 8-12 years that many old technology stacks are rarely used or are being phased out in many companies. The data industry is abundant with tooling choices, and companies are constantly looking for better ways to scale and manage their data engineering efforts.

While the fundamentals of data engineering haven't changed dramatically in the past 10 years, the tools and ecosystems have grown tremendously. For example, Apache Spark has dethroned MapReduce to become the king of big data processing. Many companies that have invested heavily in data lakes are now realizing that they need to chase the next wave of lakehouse initiatives while keeping their legacy big data systems running.

There are several data engineering trends to watch out for in the coming years:

A data warehouse is a centralized location for storing a company's structured data. It usually stores product, sales, operations, finance, marketing, and customer data. Raw data is extracted from data sources, properly cleaned and transformed, modelled, and loaded into the data warehouse. An enterprise data warehouse powers most business intelligence and analytics efforts and is therefore a critical piece of data infrastructure.

For example, the sales team may want to understand daily/weekly sales performance. Traditionally, IT may help write SQL queries to prepare the data for ad-hoc reporting. However, the prepared data may contain useful information that can also be leveraged by the marketing and product teams. Therefore, having a data engineer prepare the data and load it into a data warehouse that different teams can access is very valuable. All teams access a single source of truth, so data interpretation can be as accurate and consistent as possible.
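A minimal sketch of the sales example, using an in-memory SQLite database as a stand-in for the warehouse. The `sales` table, its columns, and the figures are hypothetical. Once the prepared table is loaded, each team can run its own aggregations (here a daily and a weekly rollup) against the same source of truth.

```python
import sqlite3

conn = sqlite3.connect(":memory:")  # stand-in for the data warehouse
conn.execute("CREATE TABLE sales (sale_date TEXT, product TEXT, amount INTEGER)")
conn.executemany(
    "INSERT INTO sales VALUES (?, ?, ?)",
    [
        ("2023-01-02", "widget", 100),
        ("2023-01-02", "gadget", 50),
        ("2023-01-03", "widget", 80),
        ("2023-01-09", "gadget", 120),
    ],
)

# Daily sales performance for the sales team.
daily = conn.execute(
    "SELECT sale_date, SUM(amount) FROM sales GROUP BY sale_date ORDER BY sale_date"
).fetchall()

# Weekly rollup from the same table (%W = week of year, weeks starting on Monday).
weekly = conn.execute(
    "SELECT strftime('%Y-%W', sale_date) AS week, SUM(amount) "
    "FROM sales GROUP BY week ORDER BY week"
).fetchall()

print(daily)   # [('2023-01-02', 150), ('2023-01-03', 80), ('2023-01-09', 120)]
print(weekly)  # [('2023-01', 230), ('2023-02', 120)]
```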

The answer is simple: everyone should.

It is important that a company's executives understand the importance of data engineering. It's directly related to budgeting, and companies should invest in data engineering as early as possible. As data systems and problems become more complex, data engineering and architecture mistakes become more costly.

Data scientists and analysts should have a solid understanding of common data engineering best practices. In many organizations, and among many data scientists, there's a culture that data should always be prepared by data engineers. We believe that data scientists need to know how to write efficient data processing scripts and rely less on data engineers to prepare data. Though data scientists don't need to write and automate as many data pipelines as data engineers do, they should still recognize the importance of writing efficient and scalable code.

Software engineers and developers write web/mobile applications that generate raw data. They usually work with data engineers to collect and ingest data into data lakes and warehouses. Frequent communication between developers and data engineers is required to sync up on source data structure changes, so that the impact on data ingestion pipelines is minimized when changes happen.
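Communication is the main safeguard, but ingestion pipelines can also detect source structure changes automatically. Below is a minimal sketch of a column-level drift check, assuming a hypothetical set of expected columns and a hypothetical record in which an upstream release renamed one field and added another.

```python
# Hypothetical column names the ingestion pipeline was built against.
expected_columns = {"user_id", "email", "created_at"}

# Hypothetical record from the source application after an upstream release
# that renamed "email" and added a "plan" field.
incoming_record = {
    "user_id": 42,
    "email_address": "a@example.com",
    "created_at": "2023-02-01",
    "plan": "pro",
}

def diff_schema(expected, record):
    """Compare the expected column names with the fields present in a record."""
    actual = set(record)
    return {
        "missing": sorted(expected - actual),     # removed or renamed upstream
        "unexpected": sorted(actual - expected),  # newly added upstream
    }

drift = diff_schema(expected_columns, incoming_record)
if drift["missing"] or drift["unexpected"]:
    # A real pipeline might alert the owning team or pause ingestion here.
    print("Schema drift detected:", drift)
```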

IT will often need to work with data engineers to provide source data connection details, grant access to infrastructure, and respond to other internal requests. It's important for IT professionals to understand common data engineering workflows.

We introduced data engineering as the process of collecting, ingesting, storing, transforming, cleaning, and modelling big data. Data engineers are the professionals mainly involved in this process. Let's break it down and give more specific examples.

Depending on the data sources, data engineers work with different tools to extract data.

Data engineers work with many tools for data ingestion. Which tool to use usually depends on the company's existing data infrastructure, project goals, and budget.

Data engineers also need to work with many different storage systems:

Data engineers should understand the differences among database engines and their particular use cases, and help the company choose the right databases for storage. In bigger companies, this is sometimes the job of data architects.

Data transformation is where data engineers spend the bulk of their time. Data engineers work with tools like SQL, Spark, and Lambda functions to transform the data in batch or real-time.

Data transformation is important because the data generates real business value only when business logic is applied to it. This is where we see significant differences among companies.

Data transformation can happen in different places.

Data engineers working on ETL/ELT need a very solid understanding of data modelling for RDBMS and NoSQL engines.
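One way to see where transformation happens: in ETL, data is transformed before it is loaded; in ELT, raw data is loaded first and transformed inside the destination, usually with SQL. The sketch below applies the same hypothetical transformation (uppercase country codes, convert cents to dollars) both ways, with SQLite standing in for the destination database. Table names and data are illustrative only.

```python
import sqlite3

# Hypothetical source rows: (order_id, country, amount_cents).
source_rows = [(1, "ca", 1999), (2, "us", 500), (3, "ca", 1250)]

conn = sqlite3.connect(":memory:")

# ETL: transform in application code first, then load the finished rows.
etl_rows = [(oid, country.upper(), cents / 100) for oid, country, cents in source_rows]
conn.execute("CREATE TABLE orders_etl (order_id INTEGER, country TEXT, amount REAL)")
conn.executemany("INSERT INTO orders_etl VALUES (?, ?, ?)", etl_rows)

# ELT: load the raw rows as-is, then transform inside the database with SQL.
conn.execute("CREATE TABLE orders_raw (order_id INTEGER, country TEXT, amount_cents INTEGER)")
conn.executemany("INSERT INTO orders_raw VALUES (?, ?, ?)", source_rows)
conn.execute(
    "CREATE TABLE orders_elt AS "
    "SELECT order_id, UPPER(country) AS country, amount_cents / 100.0 AS amount "
    "FROM orders_raw"
)

etl = conn.execute("SELECT * FROM orders_etl ORDER BY order_id").fetchall()
elt = conn.execute("SELECT * FROM orders_elt ORDER BY order_id").fetchall()
print(etl == elt)
```

Both routes produce the same table; ELT additionally keeps the raw data in the destination, which makes it possible to re-run transformations later without re-extracting.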

ETL/ELT are very complex processes. The code base can get hairy and hard to control as the project grows, especially when the business logic is complex. In some large systems, data engineers may need to deal with thousands of database tables, and without automation this can be a daunting task.

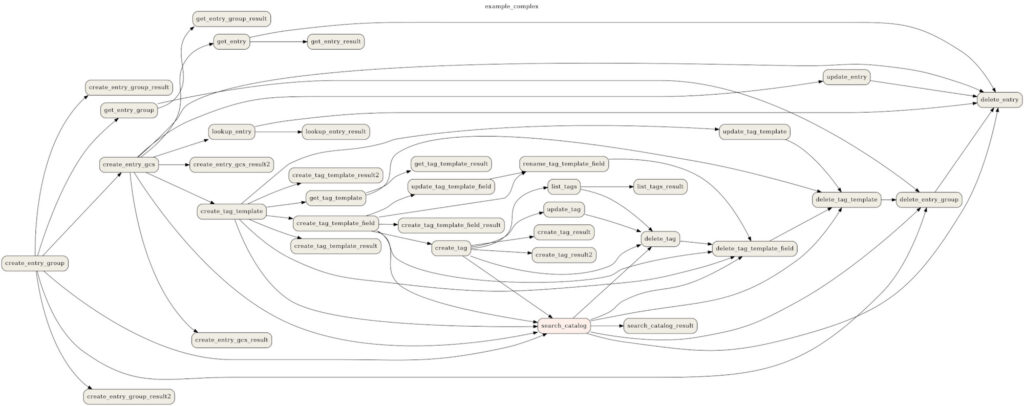

Data processing units often have dependencies on each other. For example, the output of a data pipeline that processes one massive transaction table might be used by several other downstream processes. Instead of building separate pipelines that each re-run the hour-long processing, it makes sense for all downstream pipelines to depend on the same pipeline that produces the transaction table. This creates a DAG (Directed Acyclic Graph), and data engineers need to build and maintain DAGs for complex data processing needs.
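A minimal sketch of such a dependency graph using Python's standard-library `graphlib.TopologicalSorter` (Python 3.9+). The task names are hypothetical. An orchestrator such as Airflow does much more (scheduling, retries, parallelism), but at its core it also needs an execution order in which every task runs after its dependencies, and it must reject cycles.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipeline: each key lists the tasks it depends on.
# The expensive transaction-table job runs once and feeds every downstream job.
dag = {
    "extract_transactions": set(),
    "build_transaction_table": {"extract_transactions"},
    "daily_sales_report": {"build_transaction_table"},
    "fraud_features": {"build_transaction_table"},
    "customer_ltv": {"build_transaction_table"},
}

# static_order() yields every task after all of its dependencies.
order = list(TopologicalSorter(dag).static_order())
print(order)

# A graph with a cycle is not a valid DAG, and graphlib refuses to order it.
try:
    list(TopologicalSorter({"a": {"b"}, "b": {"a"}}).static_order())
except CycleError:
    print("cycle detected: not a valid DAG")
```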

Data engineers leverage platforms such as Apache Airflow, Prefect, or Dagster to build and orchestrate pipelines. Some of these platforms can run on Kubernetes clusters, so data engineers also need a basic understanding of containers and container orchestration.

You probably noticed that when we introduced data engineering, we didn't really mention statistics, math, or machine learning. That is one of the major differences between data engineering and data science. Data engineers mainly focus on building data pipelines, ingesting data, and preparing data for analytics projects, and they don't need to build machine learning models. However, data engineers work very closely with data scientists and share a common set of skills. Data engineers sometimes also need software engineering and DevOps skills.

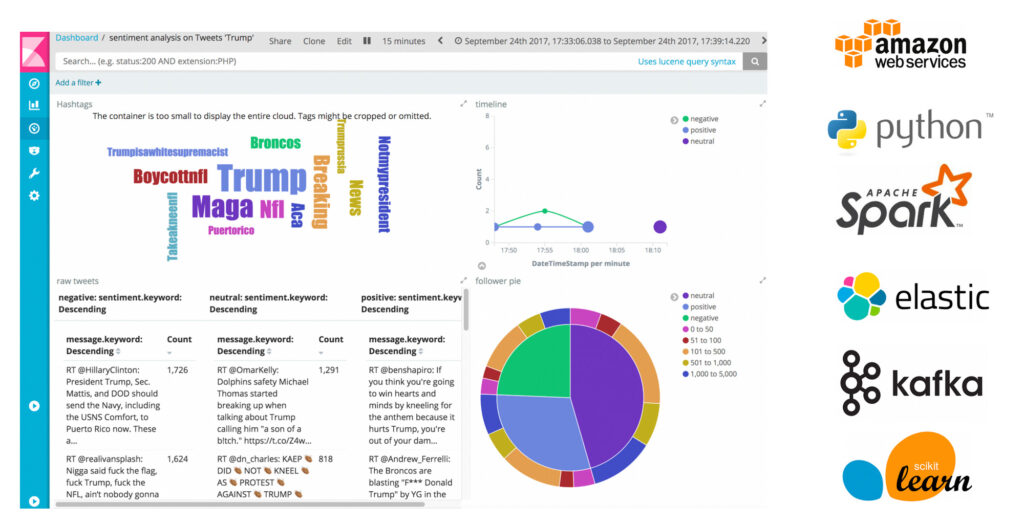

When you look at WeCloudData's data engineering bootcamp curriculum, you will notice that we cover a lot of cloud computing knowledge. We not only teach students how to work with data but also cover important aspects of data and cloud infrastructure. DevOps Engineers and Cloud Engineers also need to work with cloud infrastructure. Here are the similarities between data engineers and DevOps/Cloud Engineers:

In big tech companies, a lot of data engineers actually come from a software engineering background. Some of them are data-savvy engineers and developers. Data engineers and software engineers sometimes need to work closely to define the data formats used for extraction and ingestion. Any upstream changes on the software application side, such as adding new tables, changing table structures, or adding/removing fields, need to be communicated properly to the data engineers who work on data ingestion.

Truth be told, data engineers don't get the same glamour as data scientists do. Data scientists (DS) and data analysts (DA) work more closely with business teams such as product, marketing, and sales. Business teams are always hungry for data and insights to drive better decisions. Therefore, data scientists and analysts are more likely to get the recognition.

Data engineers are the unsung heroes, but many organizations today are starting to realize their importance. In the U.S., senior data engineers earn a higher average salary than DS and DA roles. Many startups are hiring data architects and engineers before hiring data scientists. We created the diagram below to help you understand how different teams collaborate and why data engineers are important.

Let’s start from the bottom of the diagram.

Hopefully this section explains the importance of data engineers. One suggestion WeCloudData would like to give is to develop crossover skills. Whether you want to specialize in DS, DE, or MLE, it's always helpful to learn some skills from other roles.

What does a typical day look like for a data engineer? Well, it varies depending on the type of data engineer we are talking about. For this section, let's look at a typical day in the life of a data engineer working on data lake projects. Note that not all data engineers work the same way, so take it with a grain of salt.

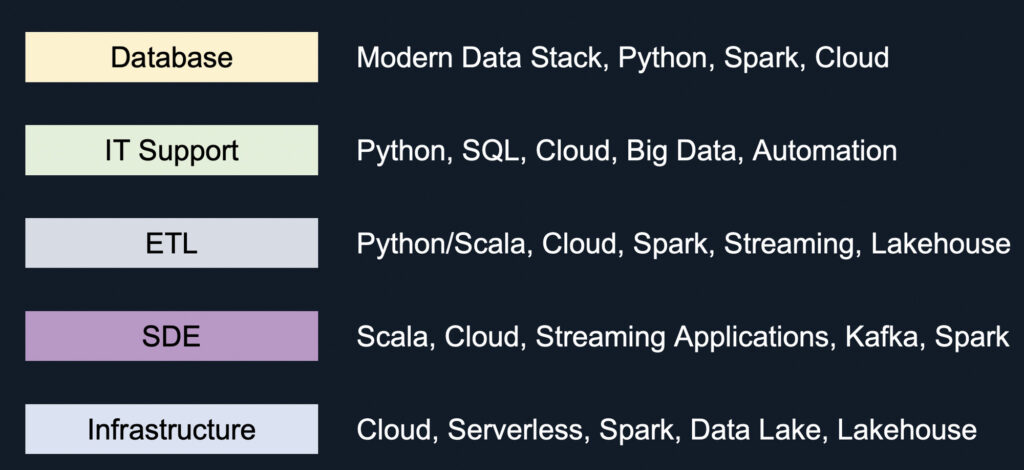

If you're about to embark on a data engineering career, make sure you understand that there are different types of data engineers, and depending on the team, the scope of their work may be very different.

We get this question often: what is the difference between a data architect and a data engineer?

Well, while a data architect may come from a data engineering background, he/she primarily works at the strategy level. A data architect spends less time on hands-on implementation, but knows enough (many are very experienced) about different tool stacks, data governance, and data architecture to help the organization set directions on tooling, high-level data infrastructure planning, and data systems design. Data engineers, on the other hand, spend more time on execution. Let's dive in and discuss the different types of data engineers.

In this section, WeCloudData categorizes data engineers into four different types:

We hope that this article helps you understand what data engineers are and what they do on a daily basis. If you liked this article, please help us spread the word.

Understanding the career path of a data engineer is important before you kick-start your new career. The good news is that data engineers have many career path options. We've seen people go down different paths and become successful and happy in their jobs.

Data engineers spend most of their time heads-down on implementation, be it a data pipeline or Spark job optimization. As they become more senior, they get more involved in architectural design meetings and business meetings, and some of their time goes to mentoring junior data engineers. A lead data engineer also sets the project roadmap along with the leaders and carries out larger-scope data projects. If you want to go down the technical career track and eventually become a staff engineer, be prepared to:

As a data engineer moves up the career ladder and takes on more responsibilities, he/she will start to spend less time on execution and more time on mentoring, business communication, and management. A manager's role is not the best fit for everyone. Many engineers avoid becoming managers so that they can focus on the technology side. However, people with the right mindset and motivation will make the transition and thrive in a leadership role.

WCD's suggestion for those who would like to work in tech leadership roles is to ask yourself the following questions:

Talking to a mentor who has been in tech leadership roles can be very helpful. Once you’re sure, start preparing early. Opportunities are there for those who are prepared.

It’s not uncommon to see data engineers going down the data science and analytics route. Data engineers usually work on the data ingestion and ETL parts of the data pipeline but they don’t do a lot of analysis of the data. Since data engineers share a common set of skills such as Python, SQL, AWS, and Spark, it’s not a very big jump from a technical perspective.

However, data engineers will need to put more effort into statistics and machine learning so that they have the advanced analytics skills required for the DS job.

Data engineering requires less statistics, math, and machine learning, but the coding requirements are higher: data engineers need to be comfortable writing production-grade code. Data transformation functions need to be properly tested. Data engineers also need an architect-level view of the entire data pipeline to make sure things run smoothly in production.
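As an illustration of what "properly tested" can mean, here is a minimal sketch of unit tests for a transformation function, using Python's built-in `unittest`. The `normalize_phone` function and its rules (keep digits, drop a leading North American country code, require 10 digits) are hypothetical business logic chosen for the example.

```python
import unittest

def normalize_phone(raw):
    """Hypothetical transformation: keep digits only and require 10 digits."""
    digits = "".join(ch for ch in raw if ch.isdigit())
    if len(digits) == 11 and digits.startswith("1"):
        digits = digits[1:]  # drop a leading North American country code
    if len(digits) != 10:
        raise ValueError(f"invalid phone number: {raw!r}")
    return digits

class TestNormalizePhone(unittest.TestCase):
    def test_strips_formatting(self):
        self.assertEqual(normalize_phone("(416) 555-0199"), "4165550199")

    def test_drops_country_code(self):
        self.assertEqual(normalize_phone("+1 416 555 0199"), "4165550199")

    def test_rejects_short_numbers(self):
        with self.assertRaises(ValueError):
            normalize_phone("555-0199")

# Run the tests programmatically (a CI job would typically use a test runner instead).
suite = unittest.defaultTestLoader.loadTestsFromTestCase(TestNormalizePhone)
result = unittest.TextTestRunner(verbosity=2).run(suite)
```

Testing edge cases such as malformed input is what keeps one bad upstream value from silently corrupting a downstream table.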

Software engineering can be a good career path for data engineers as well.

Data engineers and web developers share some skills, but the two roles are quite different. For example, a full-stack engineer job requires knowledge of front-end and back-end development. A data engineer role doesn't overlap much with it, and data engineers would need to learn new skills such as JavaScript, React, and/or Node in order to work in web development.

Data engineers' skills are more similar to those of backend software engineers. For example, both need to know RDBMS, NoSQL, Python or Java, APIs, etc. If a data engineer later wants to become a software engineer and build data-intensive software applications, he/she will need to learn the fundamentals of the software development lifecycle and system design.

Another interesting path is the tech evangelist route. If you love new technologies, have worked on many different types of projects using different tools and platforms, and love communication and community building, the tech evangelist role might be a good fit. Take the big data world as an example: when new technologies such as Hadoop and Spark were open-sourced, many startups were created around specific technologies in the ecosystem. These companies need very good tech evangelists who come from a technical background and can help advocate the technologies and build up the developer ecosystem.

With so many businesses going through digital transformation, the demand for data consultants has become increasingly high. Many companies lack the budget to build, or the experience to run, a data engineering and data science team, but they still have interesting data problems. Companies that want to collect more data for advanced analytics also want experts' help with laying the data infrastructure, migrating legacy systems to the cloud, and building data pipelines. This is where consultants step in and provide lots of value.

One suggestion WeCloudData would like to give anyone who wants to work in data engineering consulting is to specialize in certain areas. Companies hiring consultants are usually looking for specific skills. As a consultant, you also need to learn and adapt to new tools very quickly.

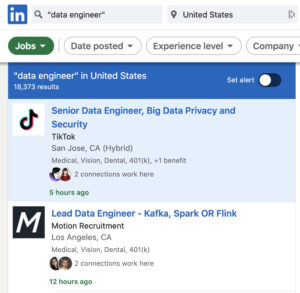

Data engineer jobs are in very high demand. Though job market demand is seasonal and fluctuates a lot throughout the year, the overall trend is very encouraging. A quick search on LinkedIn (as of Feb 6, 2023, after the recent tech job cuts) shows 18,373 results for data engineer jobs. Of course, the matching results may include jobs that are not strictly data engineer roles, but the relative scale speaks for itself.

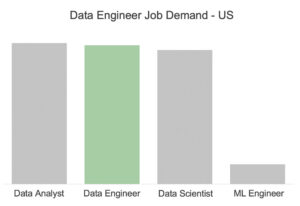

Compared to data analytics and data science jobs, job demand for data engineers is usually on par! Many companies have started to realize the importance of data engineering, and that's great news for career switchers.

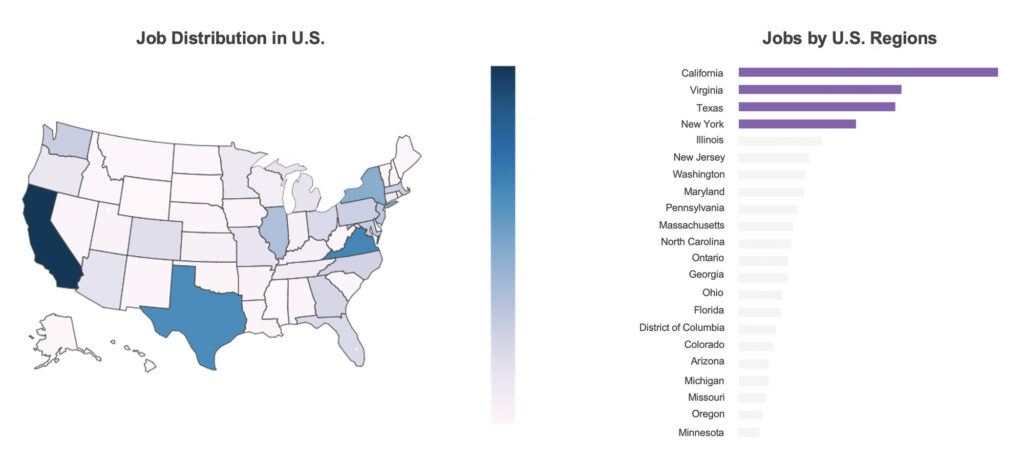

If you zoom in to the regional level, data engineer job demand in the United States comes from many different states, with concentrations in California, Virginia, Texas, and New York.

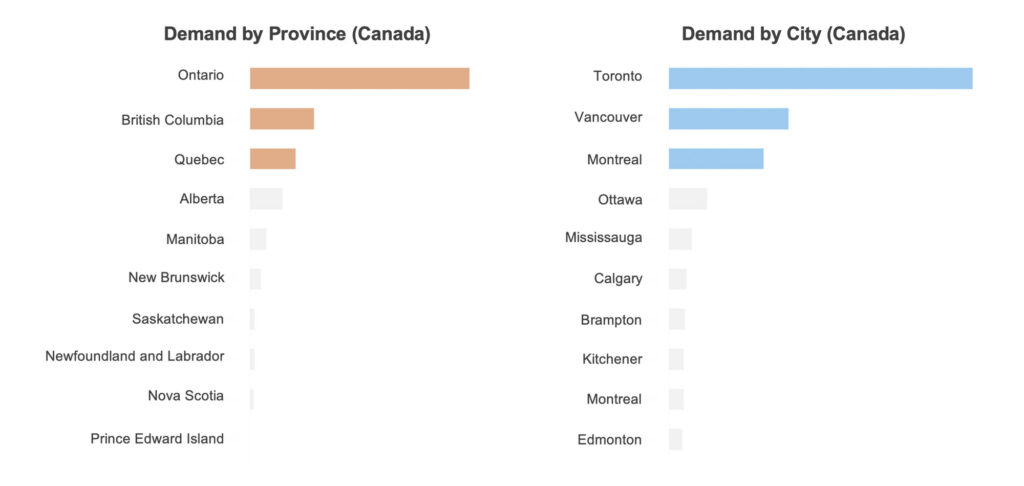

In Canada, the Greater Toronto Area accounted for a large portion of data engineering jobs, followed by Vancouver and Montreal areas.

While data engineer jobs are in high demand, they usually require more experience. Based on WeCloudData's research and our experience working with different hiring partners and recruiters, we concluded that in the current job market, and as more companies go through digital transformation, senior roles are in higher demand.

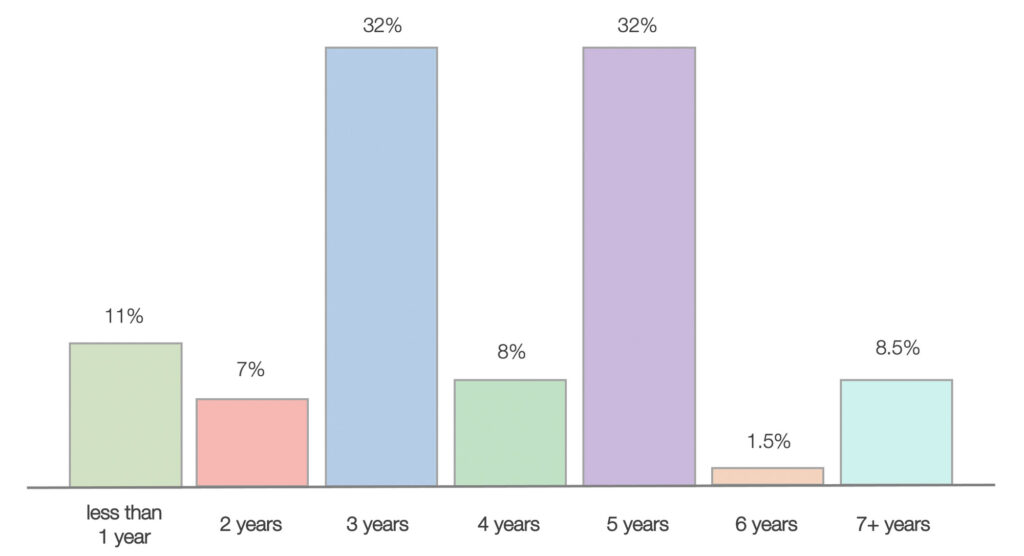

After collecting thousands of job postings from recruiting websites, we analyzed the years of experience required for DE jobs, which validated our assumption. The chart below shows that 32% of DE job descriptions mention 3 years of experience as a requirement.

Data Engineer Jobs: Distribution of Work Experience Requirements

However, this doesn't mean that junior data engineers can't find jobs. WeCloudData's recommendations to career switchers are as follows:

As enterprises pay more and more attention to data, the market demand for data engineering continues to rise. Data engineering is usually the first priority for companies entering the data world. Therefore, in recent years the market demand for data engineering has been very high and has caused a shortage of data engineering talent.

The demand for data engineers is reflected in the growth of big data engineering services provided by consulting firms like Accenture and tech companies like Cognizant. The global big data and data engineering services market is certainly experiencing high demand, with growth estimates for 2017-2025 ranging from 18% to a whopping 31% p.a.

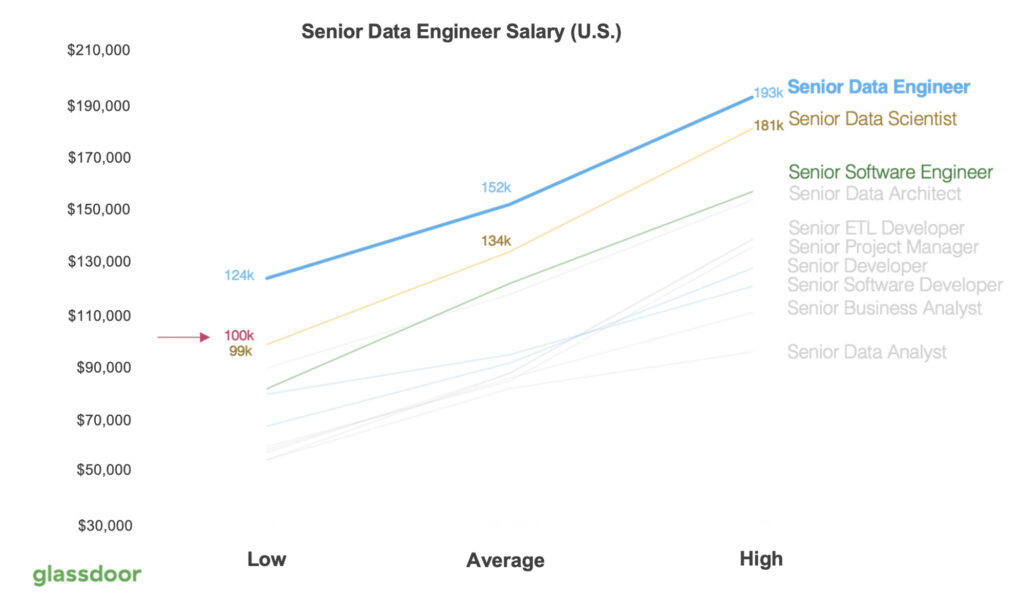

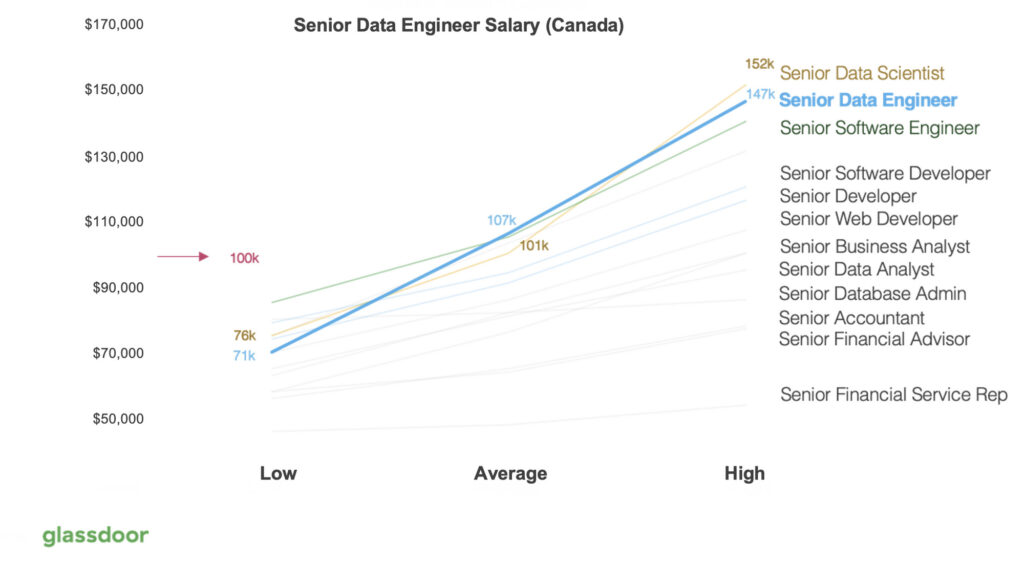

In the meantime, data engineer salaries are increasing faster than those of similar positions. The following charts show the salary distribution of senior data engineers in the U.S. and Canada.

As you can see, in the U.S., senior data engineers have an average salary of $152,000 USD, even higher than the average salary for data scientists. Take the data with a grain of salt, because salaries vary a lot across companies and industries. With signing bonuses and stock options, data engineers joining big tech may get paid well over $200,000 in the first year.

The average base salary of an intermediate level Data Engineer in Toronto is $91,497, according to Glassdoor’s 2022 market data. Here is a breakdown of the Data Engineer salaries by level:

| Level | Average Base Salary (CAD) |
|---|---|
| Junior Data Engineer | $81,923 |
| Intermediate Data Engineer | $91,497 |
| Senior Data Engineer | $121,241 |
| Lead Data Engineer | $125,567 |

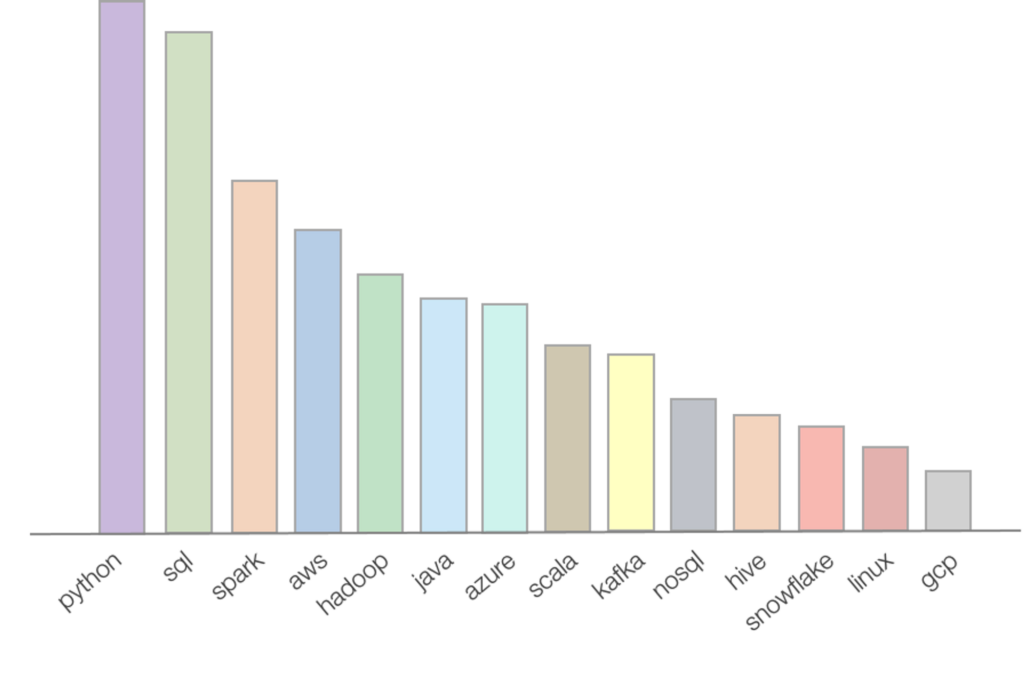

If you do some research on Google regarding top skills of a data engineer, you will be surprised to see how many skills a data engineer needs to know! Based on WeCloudData’s research (analysis done on thousands of job postings), top skills that appear most frequently on data engineer job descriptions are:

The actual list is quite long, and for beginners it can look quite daunting. To make matters worse, these tools cover different aspects of data engineering, and it's rare for anyone to learn everything. It usually takes years of working on different types of DE projects to achieve that.

One of the mistakes frequently made by learners is chasing the tools. Warning ahead! Tools are just tools and one should not learn them for the sake of learning. Listing 30+ tools on the resume is going to cause more harm than good.

The best way to learn data engineering is to follow a structured curriculum. WeCloudData’s data engineering bootcamp has open sourced its curriculum. In this guide, we will discuss it in detail.

Following a structured curriculum has the following benefits:

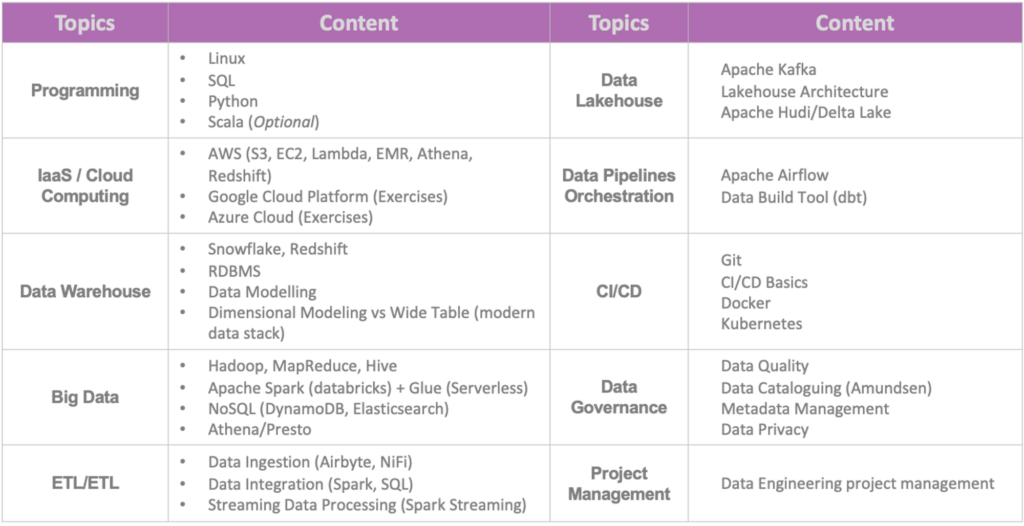

Here’s a list of topics that WeCloudData’s data engineering bootcamp covers:

Before jumping into advanced data engineering topics such as distributed systems, ETL, and streaming data processing, it's important to develop the fundamental building blocks of data engineering. For any data job, SQL and Python are always the most important skills to grasp. For data engineers in particular, Linux commands and Git are also key skills to learn.

One interesting thing about data engineering is that many different tools can be applied to solve the same problem. We've seen many online tutorials cover tools at a superficial level, so learners end up knowing a bit of everything. But when it comes to job interviews, many candidates lack the necessary experience dealing with real data and therefore fail. For example, a candidate may know Spark DataFrame syntax very well but not know how to use the tool to process complex data joins and aggregations.

For many real-life data problems, data skills are actually more important than coding skills. That means the candidate needs to know different types of SQL functions and statements, query performance tuning, and database internals. A data engineer needs to be able to join 10 tables in one query and navigate complex entity relationships while maintaining code quality and the correctness of the query output. Achieving this takes more than just learning the tools and syntax; it takes real hands-on project implementation experience. Different industries also have different types and shapes of data, ingested and stored in different ways, and processed with different business logic.

From a programming language perspective, Python or Scala is recommended for data engineers. WeCloudData suggests that learners focus on one programming language and get good at it before moving on to another. For learners from a non-tech or DA/DS background, we recommend starting with Python. For learners from a CS or development background, picking up both Scala and Python shouldn't be a big challenge.

Let’s now dive into the details and discuss the important fundamental skills to grasp for data engineers.

SQL (Structured Query Language) is the language used to interact with relational databases. It's shared by many different database engines. Different dialects exist, but most SQL syntax looks the same across database engines.

SQL is probably THE most important tool to grasp as a data engineer. It’s probably even more important than Python in most of the DE roles.

Data engineers write SQL queries to retrieve data from databases, use SQL functions to transform data in the database, use JOIN operations to merge data from different sources, and use GROUP BY to aggregate data and generate insights. Most ETL/ELT pipelines are written in SQL and executed in an RDBMS or in other processing engines like Spark.
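A minimal sketch of the JOIN and GROUP BY pattern described above, run against an in-memory SQLite database from Python. The `customers` and `orders` tables and their contents are hypothetical; the same SQL would look nearly identical in most other engines.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
    CREATE TABLE customers (customer_id INTEGER PRIMARY KEY, region TEXT);
    CREATE TABLE orders (order_id INTEGER PRIMARY KEY, customer_id INTEGER, amount INTEGER);
    INSERT INTO customers VALUES (1, 'East'), (2, 'West'), (3, 'East');
    INSERT INTO orders VALUES (10, 1, 100), (11, 1, 40), (12, 2, 300), (13, 3, 60);
""")

# JOIN merges the two sources on a shared key; GROUP BY aggregates per region.
query = """
    SELECT c.region, COUNT(o.order_id) AS num_orders, SUM(o.amount) AS revenue
    FROM orders AS o
    JOIN customers AS c ON c.customer_id = o.customer_id
    GROUP BY c.region
    ORDER BY revenue DESC
"""
result = conn.execute(query).fetchall()
print(result)  # [('West', 1, 300), ('East', 3, 200)]
```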

Here’re some suggestions from WeCloudData on learning SQL:

Python is currently the king of data programming languages. It's always worth your effort to learn Python at an intermediate to advanced level; to prepare for a data engineering career, one should have at least intermediate-level knowledge of Python programming.

Linux is also an important skill for a data engineer. There's no need to become a Linux OS expert; data engineers just need to know enough commands to use the AWS CLI, run Python scripts, and work with Docker containers on the command line.

Some of the Linux OS concepts and commands to be familiar with include:

Git and GitHub are important skills for a data engineer as well. Knowing how to version-control scripts and pipeline DAGs is important because data engineers often collaborate with other engineers on building complex pipelines.

Some basic Git knowledge that's nice to have includes:

As more and more companies move their data infrastructure to the cloud, AWS/GCP/Azure skills are becoming indispensable. While data engineers don't need the same level of knowledge as a cloud engineer, they do need solid working experience with some of the data-related cloud services.

One common question WeCloudData gets from students is which cloud platform they should invest time in. We think it doesn't matter, unless the company has very specific requirements for which cloud platform to use. Experience with one platform is quite transferable to others, so it shouldn't take long to adapt to a new platform.

Keep in mind that most of the time data engineers are end users of cloud services. Big companies have platform engineers or DevOps teams to set up data infrastructure, so data engineers can focus on processing the data. However, in smaller organizations data engineers may need to roll up their sleeves and manage infrastructure as well.

Some of the AWS services we suggest aspiring data engineers learn include:

At the beginning of your learning journey, you should probably start with just EC2 and S3. As you go through the curriculum, you can pick up other AWS services one by one. Learning in context is always more effective. For example, learn RDS when you reach the database section, and try running your SQL queries on a managed RDS instance.

After you’ve learned the data engineering fundamentals, it’s time to move on to specialization topics. Currently, the data engineering field has a couple of trends:

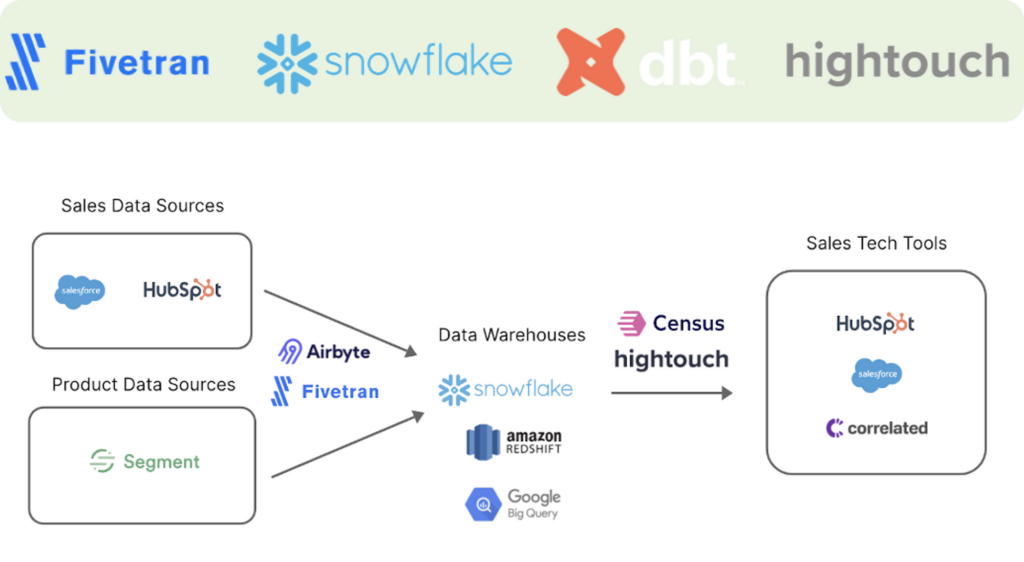

Analytics engineers mainly work with the modern data stack. SQL has made a big comeback in recent years, partly driven by innovation in the database world. For example, Snowflake’s data warehouse engine separates storage from compute, which makes the cluster much easier to manage. The modern data ecosystem has evolved around such warehouses, and it’s quite common to see companies investing in a similar tool stack:

To master the modern data stack as an analytics engineer, one needs to be familiar with the following concepts:

The modern data stack runs mainly on relational databases and, more specifically, favours data warehouses, although databases such as Postgres can be used as well. A data engineer needs to be very familiar with relational databases such as MySQL and Postgres.

Some of the important database concepts to grasp include:

A data warehouse is a complex system that centralizes a company’s important business data. It is primarily used for structured data, but it is increasingly powered by modern database engines that can store and analyze semi-structured and even unstructured data.

The enterprise data warehouse (EDW) plays an essential role in enterprise business intelligence. Data from disparate sources is collected, transformed, and loaded into the warehouse for storage and queries. How the data is modelled in the warehouse depends on how the business wants to query it, and is therefore critical for query performance and information access.

Modern data warehouses such as BigQuery and Snowflake separate storage from compute, which makes them very scalable. Companies that follow the modern data stack also tend to favour ELT over the traditional ETL and dimensional modelling approach: the data is loaded into the warehouse first, and the transformation happens inside it.

Some of the important data warehouse concepts to grasp include:

Data engineers also need to understand how to work with different data sources. In the modern data stack, platforms such as Airbyte and Fivetran can easily be configured to connect to different data sources.

When data is not available via APIs or connectors, data engineers will be tasked with scraping data or writing custom connectors. Writing custom connectors requires a basic understanding of the software development life cycle (SDLC): the code needs to be version controlled, tested, and maintained properly.
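A custom connector doesn’t have to be complicated to follow those SDLC habits. The sketch below assumes a hypothetical paginated API whose pages look like `{"records": [...], "next_page": ...}`; the `CustomConnector` class, the `fetch_page` callable, and the `updated_at` field are illustrative names, not any real connector framework’s API. Injecting `fetch_page` means the extraction logic can be unit-tested without a network connection.

```python
from dataclasses import dataclass
from typing import Callable, Iterator, Optional

# Hypothetical page shape returned by the source API:
# {"records": [{"id": ..., "updated_at": "YYYY-MM-DD"}, ...], "next_page": int or None}
FetchPage = Callable[[int], dict]


@dataclass
class CustomConnector:
    """Hypothetical connector: pages through a source and only yields records newer
    than the stored watermark (incremental extraction)."""
    fetch_page: FetchPage
    watermark: str = ""  # highest `updated_at` seen; same-format ISO-8601 strings sort as text

    def extract(self) -> Iterator[dict]:
        page: Optional[int] = 1
        newest = self.watermark
        while page is not None:
            response = self.fetch_page(page)  # in production: an HTTP call with retries
            for record in response["records"]:
                if record["updated_at"] > self.watermark:
                    newest = max(newest, record["updated_at"])
                    yield record
            page = response["next_page"]
        # Advance the watermark only after every page was read successfully.
        self.watermark = newest


# A fake two-page source, so the connector can be tested without a network.
FAKE_PAGES = {
    1: {"records": [{"id": 1, "updated_at": "2024-01-01"},
                    {"id": 2, "updated_at": "2024-01-03"}], "next_page": 2},
    2: {"records": [{"id": 3, "updated_at": "2024-01-02"}], "next_page": None},
}
connector = CustomConnector(fetch_page=FAKE_PAGES.__getitem__)
first_run = list(connector.extract())   # all three records are new
second_run = list(connector.extract())  # nothing changed since the watermark
print([r["id"] for r in first_run], second_run, connector.watermark)
```

In a real pipeline, `fetch_page` would wrap an HTTP client, and the watermark would be persisted between runs (for example in a state table) rather than kept in memory.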

The modern data stack world is a strong believer in ELT (Extract-Load-Transform), unlike the traditional ETL approach, where data is transformed in specialized tools before being loaded into the data warehouse. ELT has a few advantages compared with ETL:

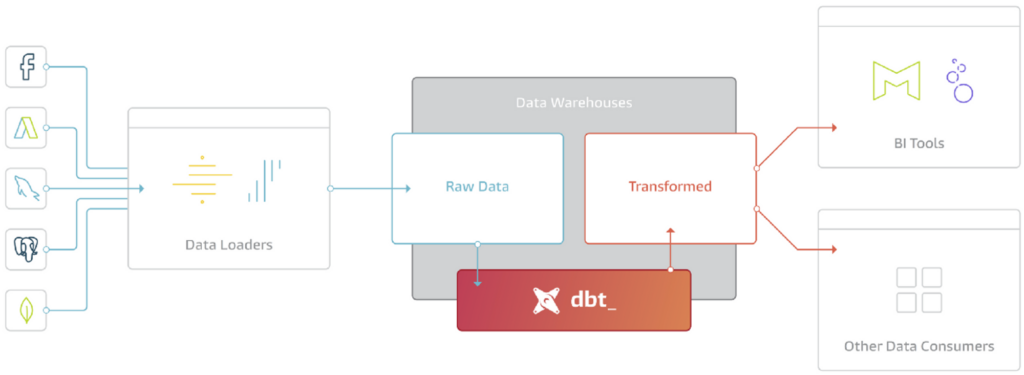

dbt has become an essential tool in modern BI. It is basically the T in ELT: it doesn’t extract or load data, but it’s extremely good at transforming data that’s already loaded into your warehouse.

One of dbt’s main selling points is that it allows BI analysts to build complex data transformation lineage while it helps manage the dependencies between models.
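The ELT idea and dbt’s role can be illustrated with a small sketch. Here SQLite plays the warehouse, the raw data is loaded untouched, and the transformation is expressed as SQL `SELECT` statements materialized as views inside the warehouse. The table and model names (`raw_orders`, `stg_orders`, `fct_shipped_revenue`) are hypothetical, and this is plain Python plus SQL, not dbt itself.

```python
import sqlite3

# SQLite stands in for the warehouse; all table/model names are hypothetical.
warehouse = sqlite3.connect(":memory:")

# Extract + Load: land the source rows as-is (everything as text, no cleaning yet).
warehouse.execute("CREATE TABLE raw_orders (order_id TEXT, status TEXT, amount TEXT)")
warehouse.executemany(
    "INSERT INTO raw_orders VALUES (?, ?, ?)",
    [("1", " Shipped ", "19.99"), ("2", "CANCELLED", "5.00"), ("3", "shipped", "30.01")],
)

# Transform: each "model" is just a SELECT run inside the warehouse, listed upstream first.
models = [
    ("stg_orders", """
        SELECT CAST(order_id AS INTEGER) AS order_id,
               LOWER(TRIM(status))       AS status,
               CAST(amount AS REAL)      AS amount
        FROM raw_orders"""),
    ("fct_shipped_revenue", """
        SELECT COUNT(*) AS shipped_orders, ROUND(SUM(amount), 2) AS revenue
        FROM stg_orders
        WHERE status = 'shipped'"""),
]
for name, select_sql in models:
    # Model names are trusted constants here, so string formatting is acceptable.
    warehouse.execute(f"CREATE VIEW {name} AS {select_sql}")

result = warehouse.execute("SELECT * FROM fct_shipped_revenue").fetchone()
print(result)  # (2, 50.0)
```

In dbt, each model is a `.sql` file containing a `SELECT`, and dbt works out the run order from the `{{ ref('...') }}` calls between models; in this sketch the order is simply hard-coded in the list.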

How data is structured and stored inside the database is at the core of a data warehouse. There are different approaches, and people follow different schools of thought. There are two popular approaches in the modern data warehouse:

OBT (One Big Table) is a very popular and often preferred approach in the modern data stack; many practitioners working with dbt prefer building wide tables. The pros and cons of the different approaches are worth a separate blog post. WeCloudData’s suggestion for analytics engineers is to spend more effort on learning and practicing data modelling. It will pay off!
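To make the contrast concrete, here is a small sketch, assuming the comparison is between a dimensional star schema (a narrow fact table joined to dimension tables) and OBT. The tables are hypothetical and SQLite stands in for the warehouse; both queries return the same answer, but the OBT version needs no joins at query time.

```python
import sqlite3

db = sqlite3.connect(":memory:")
db.executescript("""
-- Dimensional (star schema): a narrow fact table plus dimension tables.
CREATE TABLE dim_product (product_key INTEGER PRIMARY KEY, category TEXT);
CREATE TABLE dim_store   (store_key   INTEGER PRIMARY KEY, city TEXT);
CREATE TABLE fct_sales   (product_key INTEGER, store_key INTEGER, qty INTEGER);
INSERT INTO dim_product VALUES (1, 'books'), (2, 'games');
INSERT INTO dim_store   VALUES (1, 'Toronto'), (2, 'Vancouver');
INSERT INTO fct_sales   VALUES (1, 1, 3), (2, 1, 1), (1, 2, 2);

-- OBT: the same data pre-joined into one wide, denormalized table.
CREATE TABLE obt_sales AS
SELECT p.category AS category, s.city AS city, f.qty AS qty
FROM fct_sales f
JOIN dim_product p USING (product_key)
JOIN dim_store   s USING (store_key);
""")

# Star schema: the join to the dimension happens at query time.
star = db.execute("""
    SELECT p.category, SUM(f.qty) FROM fct_sales f
    JOIN dim_product p USING (product_key)
    GROUP BY p.category ORDER BY p.category""").fetchall()

# OBT: a single-table scan, no joins.
obt = db.execute("""
    SELECT category, SUM(qty) FROM obt_sales
    GROUP BY category ORDER BY category""").fetchall()

print(star)  # [('books', 5), ('games', 1)]
print(obt)   # [('books', 5), ('games', 1)]
```

The trade-off: OBT repeats dimension attributes on every row and must be rebuilt when a dimension changes, while the star schema keeps each attribute in one place at the cost of joins in every query.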

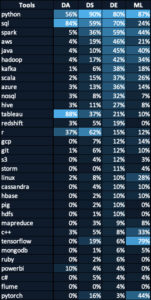

The table below compares the key skills among different data roles. As you will notice, Apache Spark (3rd row in the table) is a very important skill for data engineers. This is because data engineers usually work with raw data that tends to be large-scale, whereas the datasets used by data scientists are usually processed, trimmed-down versions.

Modern data engineers definitely need big data skills under their belts. It is an essential skill if you want to work for big tech as a data engineer, because you will most likely be dealing with massive data instead of tiny million-row tables. Yes, we’re talking about hundreds of billions and trillions of rows.

Of course, big data is not only about the word big. The four V’s of big data are:

Here are some of the tech trends to keep in mind when you’re studying big data engineering.

Back in 2003/2004, Google published the Google File System and MapReduce papers, which led to the birth of the open source big data framework Hadoop. At its core, Hadoop has a distributed file system, HDFS, and a distributed computation engine called MapReduce.

Hadoop, together with the NoSQL databases in its ecosystem, ushered in the big data era. Numerous tools and companies were created, and some are still growing today, such as DataStax, Databricks, and Cloudera.

Hadoop is no longer the go-to big data platform in the modern cloud era, where AWS, GCP, and Azure are the de facto standards for scalable computing, and Apache Spark has dethroned Hadoop MapReduce as the king of distributed processing engines. Even so, the concepts of distributed systems and MapReduce are still very important foundational knowledge.
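The MapReduce model itself is easy to sketch. The classic example is a word count: a map step emits `(word, 1)` pairs, a shuffle groups the pairs by key, and a reduce step sums each group. The sketch below runs all three phases in a single Python process purely for illustration; in Hadoop, mappers and reducers run in parallel on many nodes, and the shuffle moves data across the network.

```python
from collections import defaultdict
from itertools import chain


def map_phase(document: str):
    # Mapper: emit a (key, value) pair for every word.
    return [(word.lower(), 1) for word in document.split()]


def shuffle_phase(mapped_pairs):
    # Shuffle: group all values by key (across the network in a real cluster).
    groups = defaultdict(list)
    for key, value in mapped_pairs:
        groups[key].append(value)
    return groups


def reduce_phase(groups):
    # Reducer: combine the values of each key independently of all other keys.
    return {key: sum(values) for key, values in groups.items()}


documents = ["big data is big", "data engineering moves data"]  # each could sit on a different node
mapped = chain.from_iterable(map_phase(doc) for doc in documents)
counts = reduce_phase(shuffle_phase(mapped))
print(counts)  # {'big': 2, 'data': 3, 'is': 1, 'engineering': 1, 'moves': 1}
```

Because each key is reduced independently, the reduce work can be split across many workers, which is a large part of why the model scales.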

Suggestions for aspiring modern data engineers:

A data lake is not just a specific tool. It includes the big data tools in the ecosystem, but it has also increasingly become a process or philosophy of big data processing.

Most people associate the data lake with the storage layer. For example, Amazon S3 is commonly used as the object store for big data. S3 powers the images, videos, and files of many successful social media platforms such as Instagram, TikTok, etc.
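One common convention for organizing a data lake in an object store such as S3 is Hive-style partitioning, where `column=value` path segments encode partition values so that engines like Hive, Spark, or Athena can skip irrelevant partitions. The sketch below only builds object keys and does not talk to S3; the `events` dataset name, the `dt`/`hour` partition columns, and the file naming are assumptions for illustration.

```python
from datetime import datetime, timezone


def partition_key(dataset: str, event_time: datetime, file_id: int) -> str:
    """Build an object key with Hive-style `column=value` partition segments.
    Expects a timezone-aware datetime; partitions are computed in UTC."""
    utc = event_time.astimezone(timezone.utc)
    return f"{dataset}/dt={utc:%Y-%m-%d}/hour={utc:%H}/part-{file_id:05d}.json"


def keys_for_day(all_keys, dataset: str, day: str):
    # Partition pruning: a query filtered on one day only needs one key prefix.
    prefix = f"{dataset}/dt={day}/"
    return [key for key in all_keys if key.startswith(prefix)]


keys = [
    partition_key("events", datetime(2024, 3, 1, 14, 30, tzinfo=timezone.utc), 7),
    partition_key("events", datetime(2024, 3, 2, 9, 5, tzinfo=timezone.utc), 8),
]
print(keys[0])  # events/dt=2024-03-01/hour=14/part-00007.json
print(keys_for_day(keys, "events", "2024-03-02"))  # ['events/dt=2024-03-02/hour=09/part-00008.json']
```

With S3, the same prefix would typically be passed to a listing call, so objects under other dates are never read.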

WeCloudData’s suggestions for learning data lake technologies are as follows:

Spark is a distributed processing engine. It overtook Hadoop MapReduce to become one of the most popular engines, and Databricks, the commercial company behind it, has been widely successful in the industry. Spark started as a data processing tool and has since evolved into a one-size-fits-many unified analytics engine. It has its own ecosystem that allows it to handle many tasks such as ETL, streaming data analytics, machine learning, and data science.

As a data engineer, Spark is definitely one of the most important skills to learn. Keep in mind that data engineers will need to learn Spark at a deeper level:

Common platforms that host Spark jobs include AWS’s Elastic MapReduce (EMR) service, Google Cloud’s Dataproc, Azure Databricks or Azure Synapse Analytics (Spark pools), and Databricks’ own Spark distribution. AWS Glue also provides a Spark runtime in a serverless fashion.

Facebook open-sourced both Apache Hive and Presto. With a large base of SQL users, it realized the importance of bringing SQL to distributed systems such as Hadoop.

Hive still runs at large tech companies and big banks. Although it has started to become a legacy big data system, it’s still a very powerful tool. Its successor, PrestoDB, has become a very popular open source project. Unlike Hive, Presto is an extremely fast distributed SQL engine that allows BI analysts and data engineers to run federated queries.

The founders of Presto have since started Trino (originally released as PrestoSQL), and Amazon has built Athena, a very popular AWS query service, on top of Presto.
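A federated query reads from several independent data sources in a single SQL statement. In Presto/Trino, tables are addressed as `catalog.schema.table`, so one query can, for example, join a Hive table with a MySQL table. As a local analogy only, the sketch below uses SQLite’s `ATTACH DATABASE` to join tables that live in two separate databases; the `crm` alias and the table names are hypothetical, and nothing here uses Presto itself.

```python
import sqlite3

# Two separate databases stand in for two different source systems.
conn = sqlite3.connect(":memory:")                 # e.g. an "orders" database
conn.execute("ATTACH DATABASE ':memory:' AS crm")  # e.g. a separate CRM database

conn.execute("CREATE TABLE main.orders (customer_id INTEGER, amount REAL)")
conn.execute("CREATE TABLE crm.customers (customer_id INTEGER, segment TEXT)")
conn.executemany("INSERT INTO main.orders VALUES (?, ?)", [(1, 10.0), (2, 4.0), (1, 6.0)])
conn.executemany("INSERT INTO crm.customers VALUES (?, ?)", [(1, "vip"), (2, "regular")])

# One SQL statement reads from both databases, in the spirit of a federated query.
rows = conn.execute("""
    SELECT c.segment, SUM(o.amount) AS revenue
    FROM main.orders AS o
    JOIN crm.customers AS c ON c.customer_id = o.customer_id
    GROUP BY c.segment
    ORDER BY revenue DESC
""").fetchall()
print(rows)  # [('vip', 16.0), ('regular', 4.0)]
```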

Many big data applications require high velocity (low latency). When a large number of events need to be processed with very low latency, a distributed stream processing engine such as Spark Streaming or Apache Flink is usually required. These tools usually consume data from a distributed messaging system such as Apache Kafka. One of Kafka’s common use cases is relaying data among different systems: producers write events to topics on the message brokers, and consumers pull data from those topics. Kafka’s advantage is that it retains data on disk in the brokers for a configurable period, such as days or weeks, so streams can be replayed when necessary.

Data engineers don’t need to be experts in configuring Kafka clusters, but knowing how to consume data from Kafka and how to produce data into Kafka topics can be very helpful.
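The core idea behind Kafka’s replay ability is that a topic is an append-only log and each consumer group simply tracks an offset into it. The toy class below is a hypothetical, single-partition, in-memory model of that idea; it is not the Kafka client API, and it ignores partitions, brokers, and retention (which Kafka controls with topic settings such as `retention.ms`).

```python
from collections import defaultdict


class TopicLog:
    """Toy single-partition topic (hypothetical, not the Kafka API): an append-only
    list where each record's index is its offset."""

    def __init__(self):
        self.records = []
        self.committed = defaultdict(int)  # consumer group -> next offset to read

    def produce(self, value):
        self.records.append(value)
        return len(self.records) - 1  # offset assigned to the new record

    def poll(self, group, max_records=10):
        start = self.committed[group]
        batch = self.records[start:start + max_records]
        self.committed[group] = start + len(batch)  # commit the new position
        return batch

    def seek(self, group, offset):
        # Replay: rewind a group's position; the records are still in the log.
        self.committed[group] = offset


topic = TopicLog()
for event in ["signup", "click", "purchase"]:
    topic.produce(event)

first = topic.poll("analytics")                   # ['signup', 'click', 'purchase']
second = topic.poll("analytics")                  # [] (caught up)
fraud_batch = topic.poll("fraud", max_records=2)  # ['signup', 'click'] (independent group)
topic.seek("analytics", 1)
replayed = topic.poll("analytics")                # ['click', 'purchase']
print(first, second, fraud_batch, replayed)
```

Because consuming doesn’t delete anything, two groups can read the same events independently, and rewinding an offset replays history; in real Kafka this only works while the data is still within the retention period.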

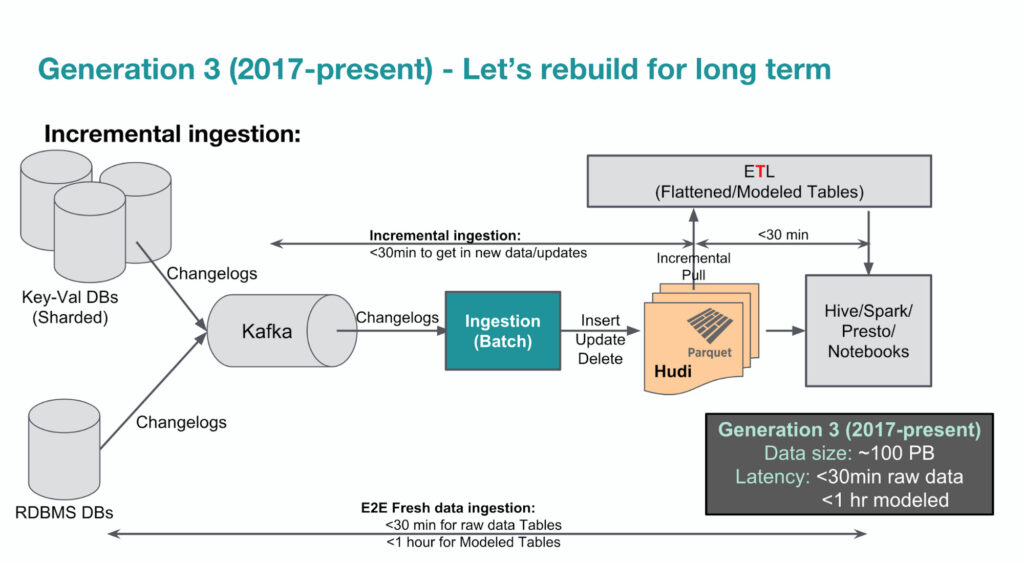

Data lake technologies have been around for many years. However, they haven’t fully delivered on the promise of big data for many companies. For example, data lakes don’t support ACID transactions, which are common in the database world. It’s also hard to combine batch and streaming jobs in a data lake, so data engineers often need to maintain separate pipelines for batch and real-time processing. Due to these limitations, companies such as Databricks, Netflix, and Uber have created lakehouse architectures that try to solve these challenges. Common lakehouse architectures include:

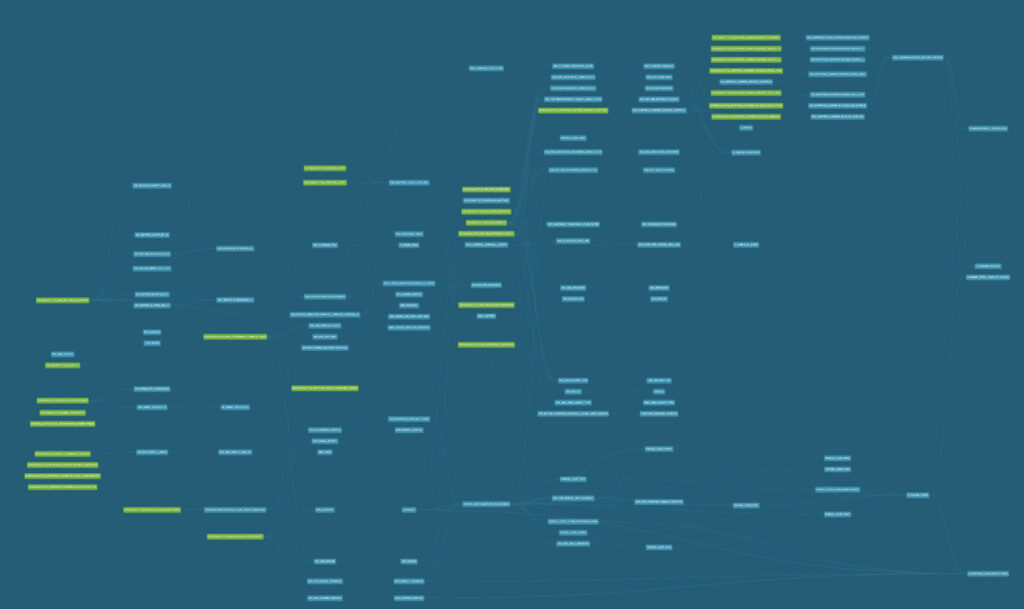

The diagram below shows Uber’s third-generation big data platform, in use since 2017. Apache Hudi has played an important role in enabling incremental big data ingestion. WeCloudData suggests that aspiring data engineers at least get familiar with the concepts of the lakehouse and try out Apache Hudi or Iceberg.
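Lakehouse table formats address the missing-ACID problem mentioned above largely with a transaction log: data files are immutable, and a table version only exists once a log entry referencing its files has been committed atomically. Delta Lake, for instance, keeps this log in a `_delta_log` directory, while Iceberg and Hudi use their own metadata layouts. The sketch below is a toy, hypothetical format (`LogTable`, a `_log` directory, JSON commit files) that only illustrates atomic commits, ignored uncommitted files, and time travel; it is not how any of these projects is actually implemented.

```python
import json
import os
import tempfile
import uuid


class LogTable:
    """Toy, hypothetical table format: immutable data files plus an ordered commit log."""

    def __init__(self, root):
        self.root = root
        self.log_dir = os.path.join(root, "_log")
        os.makedirs(self.log_dir, exist_ok=True)

    def _versions(self):
        # Each committed version is one file named like 00000.json in the log directory.
        return sorted(int(name.split(".")[0]) for name in os.listdir(self.log_dir))

    def live_files(self, as_of=None):
        # Replay the log in order to find which data files make up the table.
        live = []
        for version in self._versions():
            if as_of is not None and version > as_of:
                break
            with open(os.path.join(self.log_dir, f"{version:05d}.json")) as f:
                action = json.load(f)
            live = [name for name in live if name not in action["remove"]] + action["add"]
        return live

    def read(self, as_of=None):
        rows = []
        for name in self.live_files(as_of):
            with open(os.path.join(self.root, name)) as f:
                rows.extend(json.load(f))
        return rows

    def commit(self, rows, replace=False):
        # 1) Write the new data file; no reader sees it until a log entry references it.
        data_name = f"data-{uuid.uuid4().hex}.json"
        with open(os.path.join(self.root, data_name), "w") as f:
            json.dump(rows, f)
        action = {"add": [data_name], "remove": self.live_files() if replace else []}
        versions = self._versions()
        next_version = versions[-1] + 1 if versions else 0
        # 2) Write the log entry to a temp file, then hard-link it into place.
        #    os.link raises FileExistsError if another writer already claimed this
        #    version, so at most one commit wins (a real writer would retry).
        tmp_path = os.path.join(self.root, f".tmp-{uuid.uuid4().hex}")
        with open(tmp_path, "w") as f:
            json.dump(action, f)
        try:
            os.link(tmp_path, os.path.join(self.log_dir, f"{next_version:05d}.json"))
        finally:
            os.remove(tmp_path)
        return next_version


with tempfile.TemporaryDirectory() as root:
    table = LogTable(root)
    table.commit([{"id": 1}, {"id": 2}])  # version 0
    table.commit([{"id": 3}])             # version 1
    # Simulate a writer that crashed after writing data but before committing.
    with open(os.path.join(root, "data-orphan.json"), "w") as f:
        json.dump([{"id": 999}], f)
    table.commit([{"id": 10}], replace=True)  # version 2: overwrite the table
    current = table.read()
    previous = table.read(as_of=1)  # "time travel" back to version 1

print(current)   # [{'id': 10}]
print(previous)  # [{'id': 1}, {'id': 2}, {'id': 3}]
```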

Companies deal with different types of data sources, and in order to create powerful insights, data from different sources usually needs to be integrated. ETL plays an important role in data integration, and data engineers will be writing data pipelines for complex transformations.

As a data engineer, being able to write automated data pipelines is a crucial skill. Apache Airflow is probably the most popular data pipeline orchestration tool in terms of community. It’s written in Python and can be thought of as cron on steroids. In recent years, projects such as Dagster and Prefect have also become quite popular and are even preferred by some companies.
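The "cron on steroids" description mostly comes down to three things cron alone doesn’t give you: dependencies between tasks, automatic retries, and one run per schedule interval, including backfills of past dates. The sketch below models those three ideas in plain Python using `graphlib` (Python 3.9+); it is not Airflow’s API. The task names, the simulated flaky `extract` step, and the `ds` argument (borrowed from Airflow’s name for the logical date) are assumptions for illustration.

```python
from datetime import date, timedelta
from graphlib import TopologicalSorter

attempts = {"extract": 0}


def extract(ds):
    attempts["extract"] += 1
    if attempts["extract"] == 1:
        # Simulated flaky source: the very first call fails.
        raise ConnectionError("source temporarily unavailable")
    return f"extracted {ds}"


def transform(ds):
    return f"transformed {ds}"


def load(ds):
    return f"loaded {ds}"


TASKS = {"extract": extract, "transform": transform, "load": load}
UPSTREAM = {"transform": {"extract"}, "load": {"transform"}}  # task -> tasks it depends on


def run_dag(ds, retries=2):
    """Run every task once for logical date `ds`, upstream tasks first."""
    log = []
    for name in TopologicalSorter(UPSTREAM).static_order():
        for attempt in range(retries + 1):
            try:
                log.append(TASKS[name](ds))
                break
            except ConnectionError:  # only transient errors are retried in this sketch
                if attempt == retries:
                    raise
    return log


def daily_logical_dates(start, end):
    # One run per day in [start, end], which is what a backfill iterates over.
    day = start
    while day <= end:
        yield day.isoformat()
        day += timedelta(days=1)


history = [run_dag(ds) for ds in daily_logical_dates(date(2024, 1, 1), date(2024, 1, 2))]
print(history)
```

In Airflow itself you would declare the same pipeline with operators inside a DAG and express the dependencies as `extract >> transform >> load`, leaving retries and date intervals to the scheduler.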

WeCloudData’s suggestion for aspiring data engineers about data pipelines:

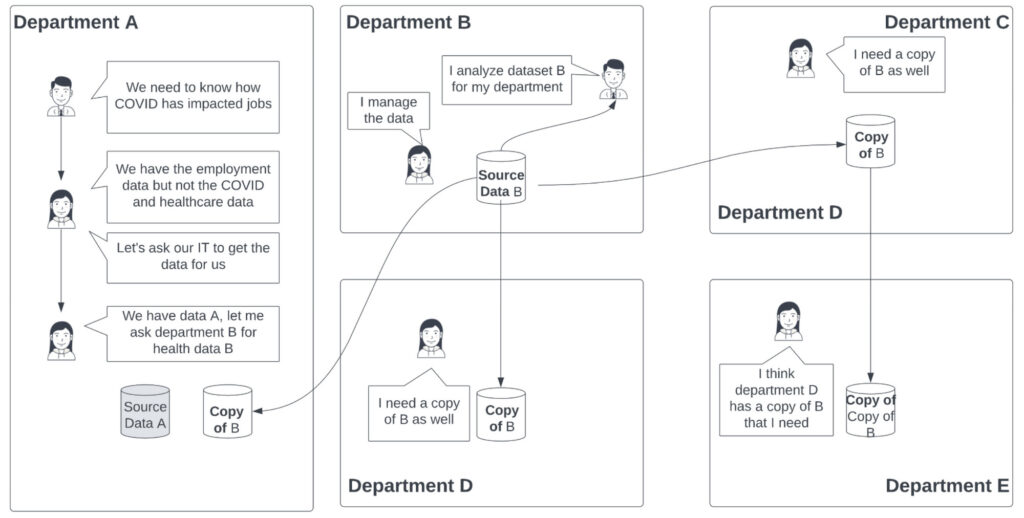

Data governance is probably a less familiar topic for many beginner data engineers. It usually involves people, processes, and technology. It is hard to self-learn because you probably won’t find a good use case to apply it to on your own. The story below can help you understand some of the pain points and why governance is needed.

As you can see, things quickly become unmanageable.

Some of the important aspects of data governance include:

WeCloudData’s suggestion for aspiring data engineers:

Over the years WeCloudData has worked with many career switchers from non-tech backgrounds who successfully transitioned to data engineering. One common question we receive from professionals with non-tech backgrounds is how hard it is to switch to the data field without a coding or tech background.

Instead of giving a simple yes-or-no answer, we’d like to help everyone understand what type of challenges non-tech candidates will run into and what it takes to become a data engineer from scratch.

Below we introduce the learning paths and focus areas for career switchers from IT backgrounds. They don’t apply to every single case, so use them only as a general guide.

The rise of managed database services from AWS, GCP, and Azure has reduced the need for database administrators. IT departments have been cutting roles, and DBAs are among those impacted.

DBAs have very specialized knowledge about particular database technologies. They understand database internals and management really well, which is an advantage when switching to DE. However, an important duty of a data engineer is data processing (ETL, integration), and most DBAs don’t directly work on data processing.

WeCloudData’s suggestion for DBAs

If you come from an IT support background and haven’t done much programming, WeCloudData would recommend the following path:

If you come from an ETL background, your experience is very relevant; you’ve basically already been working as a data engineer. However, you may have been dealing with legacy or traditional ETL tools such as DataStage, SSIS, or Informatica, and you probably haven’t done a lot of Spark programming.

WeCloudData’s suggestion for ETL developers

Software developers in big tech may not get a big salary jump when switching to data engineering jobs. However, there’s big demand for software engineers who can build data-intensive applications, so it’s not necessarily a career switch but rather an up-skilling path.

Most software developers/engineers come from a CS background and have good experience with databases and SQL.

WeCloudData’s suggestion for SDEs switching to DE are as follows:

Platform engineers usually work with DevOps to deliver infrastructure for the data teams. Though they may be very familiar with different systems such as Spark, Hadoop, and Cloud, they usually don’t work closely with data.

WeCloudData’s suggestion for Platform/Infra engineers switching to DE:

A portfolio is a collection of projects, code, documents, and other artifacts that help you showcase your skills. These usually go beyond degrees and certifications and show the practical skills a candidate has acquired.

A decent portfolio project usually takes more effort than most learners expect. It’s more than just uploading your code to GitHub. Data engineering projects are also harder to demonstrate than data science projects, which have more visual components.

Below is a list of things aspiring data engineers can work on to strengthen their profiles.

WeCloudData’s suggestion

Traditional data engineers in big companies may only need to focus on very specific tasks. For example, an ETL specialist may work with Informatica to transform and prepare data within the Informatica environment. The job market, however, is increasingly looking for candidates who know more tools and design patterns. Especially in startups and tech companies that build their infrastructure on public clouds, data engineers have access to an abundance of tools, and they either need to know how to work with many of them or have to learn them very quickly.

Therefore, WeCloudData suggests that when learning data engineering and building portfolio projects, it’s very important to build end-to-end projects.

What does an end-to-end project mean? An end-to-end project covers the entire data pipeline: data collection and ingestion, cleaning/transformation (ETL/ELT), loading into a data lake/warehouse, and reverse ETL. Building something of this scope will be challenging for a learner, but try to build a pipeline that’s as complex and complete as possible.
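Reverse ETL is probably the least familiar stage in that list: it takes modelled data out of the warehouse and syncs it back into operational tools such as a CRM. A minimal sketch, assuming a hypothetical `customer_scores` table and a made-up VIP threshold, with a plain dict standing in for the CRM (`push_to_crm` is not a real client library):

```python
import sqlite3

# The "warehouse" holds a modelled table; the table name and threshold are hypothetical.
warehouse = sqlite3.connect(":memory:")
warehouse.executescript("""
CREATE TABLE customer_scores (email TEXT PRIMARY KEY, lifetime_value REAL);
INSERT INTO customer_scores VALUES ('a@example.com', 120.0), ('b@example.com', 15.0);
""")


def push_to_crm(crm, records):
    """Hypothetical stand-in for a CRM API client: upsert a tier per email."""
    for email, tier in records:
        crm[email] = tier


def reverse_etl(conn, crm, vip_threshold=100.0):
    rows = conn.execute(
        "SELECT email, CASE WHEN lifetime_value >= ? THEN 'vip' ELSE 'standard' END "
        "FROM customer_scores",
        (vip_threshold,),
    ).fetchall()
    # Only send records whose value differs from what the CRM already has.
    changed = [(email, tier) for email, tier in rows if crm.get(email) != tier]
    push_to_crm(crm, changed)
    return len(changed)


crm = {"b@example.com": "standard"}  # b is already in sync
synced = reverse_etl(warehouse, crm)
print(synced, crm)  # 1 {'b@example.com': 'standard', 'a@example.com': 'vip'}
```

Sending only changed records keeps the number of API calls to the downstream tool small, which matters when those tools enforce rate limits.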

Only showing ETL data-processing code/scripts is not going to give candidates a big advantage in the job search process.

Keep in mind that hiring managers always want to know the following:

A data pipeline is hard to explain in words alone, and the best way to present it is with a pipeline diagram. Data engineers need to know how to work with diagramming tools such as draw.io or Lucidchart to design pipelines and data architectures. The diagram doesn’t have to look highly professional, but the key components need to be articulated. This helps hiring managers understand what you did and leaves a strong impression. You can also use it during an interview to walk the hiring managers and senior data engineers through your project.

Nothing beats real hands-on experience! Hiring managers will always prefer to hire someone who has real experience. Real experience doesn’t only mean work experience; it could be self-guided projects, freelance projects, or some other kind of real project working with clients.

In this section, we will discuss why real project experience can help you stand out. Here are some of the benefits of real client projects:

Let’s try to understand the important factors in hiring decisions:

For career switchers coming from non-data and non-tech backgrounds, past work experience doesn’t carry much weight and oftentimes creates disadvantages. It’s easy for hiring managers to make biased assumptions that you’re not a good fit without even looking into your skills and capabilities. Working on real client projects means the work you do carries more weight on your resume and helps build trust, so employers will have more confidence when deciding among different candidates. You will also learn things that you wouldn’t have the opportunity to practice on your own:

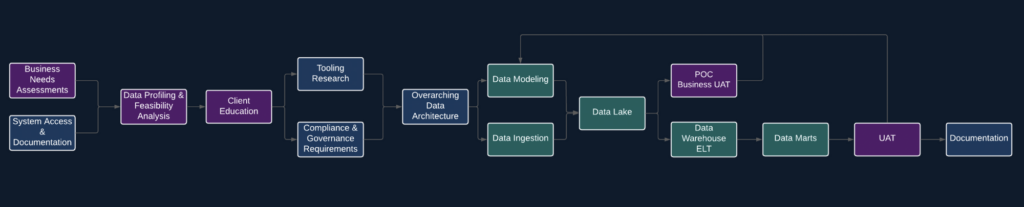

Here’s a sample data warehousing project workflow.

A project team is tasked with creating a modern data warehouse in the Azure cloud. The project managers, data engineers, and IT/platform engineers need to work together to make the following things happen for the company/client:

Don’t know where to get started? Get some inspiration from WeCloudData students and alumni. You can find some project demos on our YouTube channel: https://youtube.com/@weclouddata

WeCloudData is the leading data science and AI academy. Our blended learning courses have helped thousands of learners and many enterprises make successful leaps in their data journeys.

Canada:

180 Bloor St W #1003

Toronto, ON, Canada M5S 2V6

US:

16192 Coastal Hwy

Lewes, DE 19958, USA